If an eighth grade student in California shared a photo of a naked classmate with friends without consent, the student could possibly be prosecuted under state laws dealing with child pornography and disorderly conduct.

However, if the photo is an AI-generated deepfake, it is not clear that any state law would apply.

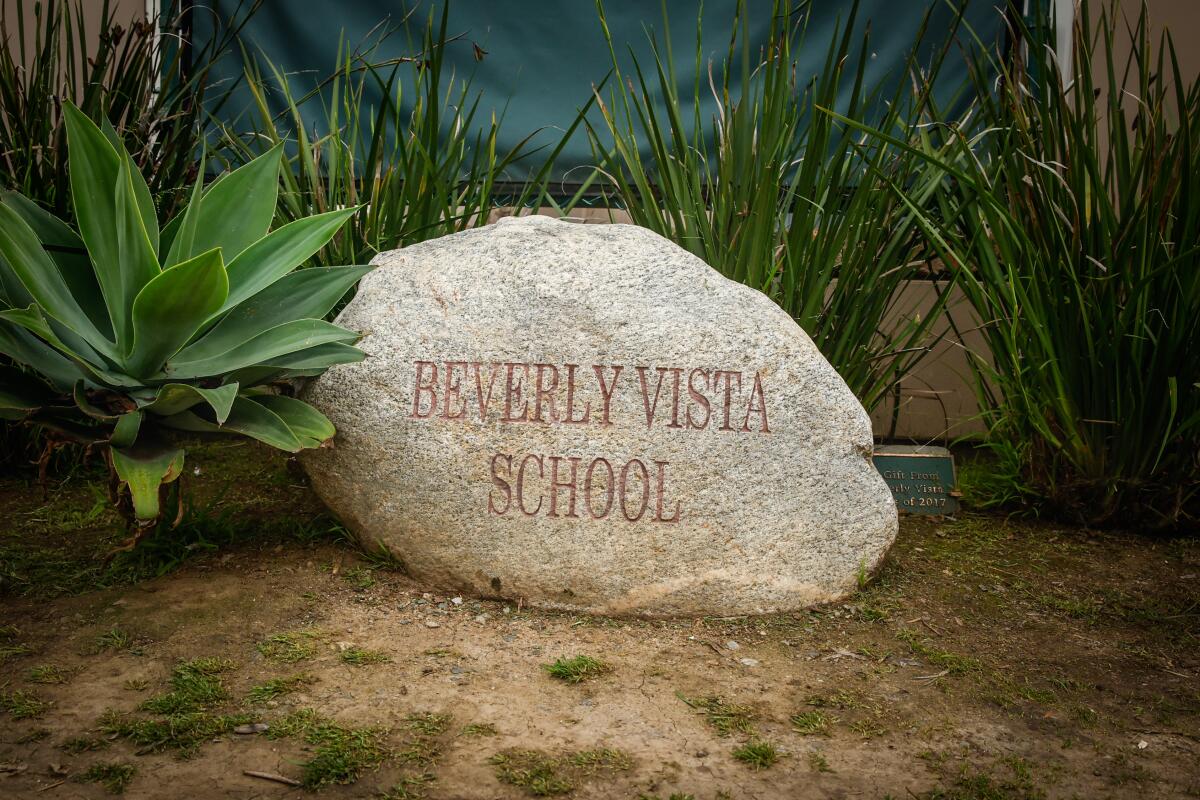

That's the dilemma facing the Beverly Hills Police Department as it investigates a group of Beverly Vista High School students who allegedly shared photos of classmates that had been doctored with an app powered by artificial intelligence. According to the district, the images used real faces of students over AI-generated naked bodies.

Lt. Andrew Myers, Beverly Hills police spokesman, said no arrests have been made and the investigation continues.

Security guards stand outside Beverly Vista High School on February 26 in Beverly Hills.

(Jason Armond / Los Angeles Times)

Michael Bregy, superintendent of the Beverly Hills Unified School District, said the district's investigation into the episode is in its final stages.

“Disciplinary action was taken immediately and we are pleased that this was a contained and isolated incident,” Bregy said in a statement, although no information was disclosed about the nature of the action, the number of students involved or their grade level.

He called on Congress to prioritize the safety of children in the United States, adding that “technology, including artificial intelligence and social media, can be used in incredibly positive ways, but like cars and cigarettes at first, if they are not regulated, they are completely destructive.”

However, the technology involved complicates whether fake nudes constitute a criminal offense.

Federal law includes computer-generated images of identifiable people in the prohibition of child pornography. Although the ban seems clear, legal experts warn that it still needs to be tested in court.

California's child pornography law does not mention artificially generated images. Instead, it applies to any image that “depicts a person under the age of 18 personally engaging in or simulating sexual conduct.”

Joseph Abrams, a Santa Ana criminal defense attorney, said an AI-generated nude “does not represent a real person.” It could be defined as child erotica, he said, but not child pornography. And from his standpoint as a defense attorney, he said, “I don't think it crosses the line of this particular statute or any other statute.”

“As we enter this era of AI,” Abrams said, “these types of issues are going to have to be litigated.”

Kate Ruane, director of the free expression project at the Center for Democracy and Technology, said early versions of digitally altered child sexual abuse material superimposed a child's face on a pornographic image of another person's body. Now, however, apps and other freely available “undressing” programs generate fake bodies that match real faces, raising legal questions that have not yet been fully addressed, she said.

Still, she said, she struggled to understand why the law wouldn't cover sexually explicit images just because they were artificially generated. “The damage we were trying to address [with the prohibition] is the harm to the child that the existence of the image entails. Exactly the same thing happens here,” Ruane said.

However, there is another obstacle to criminal charges. Under both state and federal law, the ban applies only to “sexually explicit conduct,” which boils down to sexual intercourse, other sexual acts, and “lewd” displays of a child's private parts.

Courts use a six-prong test to determine whether something is lewd exposure, considering aspects such as what the image focuses on, whether the pose is natural, and whether the image is intended to arouse the viewer. A court would have to weigh those factors when evaluating images that were not sexual in nature before being “stripped” by the AI.

“It's really going to depend on what the final photo looks like,” said Sandy Johnson, senior legislative policy counsel for the Rape, Abuse and Incest National Network, the largest anti-sexual violence organization in the United States. “It's not just about nude photos.”

The age of the children involved would not be a defense against a conviction, Abrams said, because “children have no more rights than adults to possess child pornography.” But like Johnson, he noted that “photos of naked children are not necessarily child pornography.”

Neither the Los Angeles County district attorney's office nor the state Department of Justice immediately responded to requests for comment.

State lawmakers have proposed several bills to fill gaps in the law on generative AI. These include proposals to extend criminal prohibitions on the possession of child pornography and the non-consensual distribution of intimate images (also known as “revenge porn”) to computer-generated images, and to convene a working group of academics to advise legislators on “relevant issues and impacts of artificial intelligence and deepfakes.”

Members of Congress have competing proposals that would expand federal criminal and civil penalties for the nonconsensual distribution of AI-generated intimate images.

At Tuesday's meeting of the district's Board of Education, Dr. Jane Tavyev Asher, director of pediatric neurology at Cedars-Sinai, asked the board to consider the consequences of “giving our children access to so much technology” in and out of the classroom.

Beverly Vista High School on Feb. 26 in Beverly Hills.

(Jason Armond / Los Angeles Times)

Instead of having to interact and socialize with other students, Asher said, students are allowed to spend their free time at school on their devices. “If they're on the screen all day, what do you think they'll want to do at night?”

Research shows that children under 16 should not use social media, she said. Noting how the district was blindsided by reports of AI-generated nudity, she warned: “There will be more things that will surprise us, because the technology will develop at a faster pace than we can imagine, and we have to protect our children from it.”

Board members and Bregy expressed outrage at the meeting over the images. “This has just shaken the foundation of trust and confidence that we work every day to create for all of our students,” Bregy said, though he added, “We have very resilient students and they seem happy and a little confused about what's going on.”

“I ask that parents continually look at their [children’s] phones, what apps are on their phones, what they send, what social media sites they use,” he said. These devices are “opening the door to many new technologies that are appearing without any regulation.”

Board member Rachelle Marcus noted that the district has banned students from using their phones at school, “but these kids come home after school and that's where the problem starts. We parents need to take more control of what our students do with their phones, and that's where I think we're completely failing.”

“The missing link right now, from my perspective, is the partnership with parents and families,” said board member Judy Manouchehri. “We have dozens and dozens of programs aimed at keeping your kids off the phone in the afternoon.”