- Hugging Face has launched HuggingSnap, an iOS app that can analyze and describe what your iPhone's camera sees.

- The app works offline, without sending data to the cloud.

- HuggingSnap is imperfect, but it demonstrates what can be done entirely on-device.

Giving AI the ability to see is becoming increasingly common as tools such as ChatGPT, Microsoft Copilot, and Google Gemini add vision features. Hugging Face has just launched its own take on the idea with a new iOS app called HuggingSnap, which looks at the world through your iPhone's camera and describes what it sees without connecting to the cloud.

Think of it as having a personal tour guide who knows how to keep a secret. HuggingSnap runs entirely offline using Hugging Face's in-house vision model, SmolVLM2, to handle instant object recognition, scene descriptions, text reading, and general observations about your surroundings without any of your data being sent off to the internet.

This offline capability makes HuggingSnap particularly useful in situations where connectivity is spotty. If you are hiking through the desert, traveling abroad without reliable internet, or simply standing in one of those grocery store aisles where cell service mysteriously disappears, having this capability on your phone is a real blessing. The app also claims to be very efficient, meaning it shouldn't drain your battery the way cloud-based models do.

HuggingSnap looks at my world

I decided to give the app a spin. First, I pointed it at my laptop screen while my browser was open to my TechRadar bio. Initially, the app did a solid job of transcribing the text and explaining what it saw. However, it drifted away from reality when it looked at the headlines and other details around my bio. HuggingSnap took references to new computer chips in one headline as an indicator of what powers my laptop, and seemed to think that some of the names in the headlines belonged to other people who use my laptop.

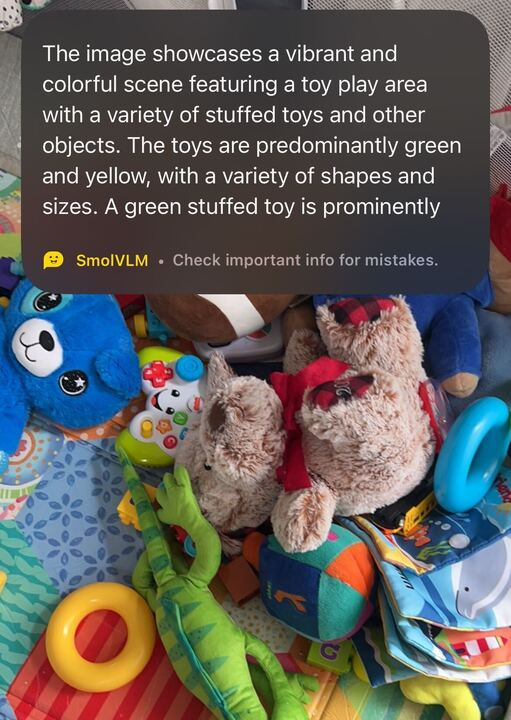

Next, I pointed my camera at my son's playpen, which was full of toys I hadn't yet cleaned up. Again, the AI did a great job with the broad strokes, describing the play area and the toys inside. It got the colors right and even picked up on textures when telling stuffed toys apart from blocks. It did stumble on some of the details, though. For example, it mislabeled one toy as a dog and seemed to think a stacking ring was a ball. Overall, I'd call HuggingSnap great for describing a scene to a friend, but not good enough for a police report.

Seeing the future

HuggingSnap's on-device focus sets it apart from your iPhone's built-in capabilities. While your iPhone can identify plants, copy text from images, and tell you whether the spider on your wall is the kind that should make you move out, it almost always has to send information to the cloud to do so.

HuggingSnap stands out in a world where most apps want to track every last detail about you. That said, Apple is investing heavily in on-device AI for its future iPhones. But for now, if you want privacy with your AI vision, HuggingSnap could be perfect for you.