Midjourney, the generative AI platform you can currently use on Discord, just introduced the concept of reusable characters, and I'm impressed.

It's a simple idea: Instead of using prompts to create countless variations of generative images, you create and reuse a central character to illustrate all your themes, live out your wildest fantasies, and maybe tell a story.

Until recently, Midjourney, which is built on a diffusion model (trained by adding noise to original images and having the model learn to remove that noise), could create some beautiful and surprisingly realistic images from the prompts you entered in its Discord channel (“/imagine: [prompt]”). But unless you asked it to alter one of its generated images, each new set of images, and the characters in them, would look different.

Now, Midjourney has come up with an easy way to reuse your Midjourney AI characters. I tried it, and for the most part, it works.

In one prompt, I described someone who looked a bit like me, chose my favorite of Midjourney's four generated image options, and upscaled it for more detail. Then, using the new “--cref” parameter and the URL of my generated image (the one with the character I liked), I prompted Midjourney to generate new images featuring that same AI character.

Later, I described a character with the qualities of a Charles Schulz Peanuts character and, once I had one I liked, reused him in a different scenario where his kite was stuck in a tree (Midjourney couldn't or wouldn't put the kite in the tree's branches).

It's far from perfect. Midjourney still tends to overwork the art, but I'd argue that the characters in the new images are recognizably the same ones I created in my initial images. The more descriptive your initial character-creation prompts are, the better the results you'll get in subsequent images.

Perhaps the most surprising thing about the Midjourney update is the sheer simplicity of the creative process. Writing prompts in natural language has always been easy, but getting a system to make a consistent character do something new typically requires some programming or even experience with training AI models. Here, it's just a simple prompt, a parameter, and an image reference.

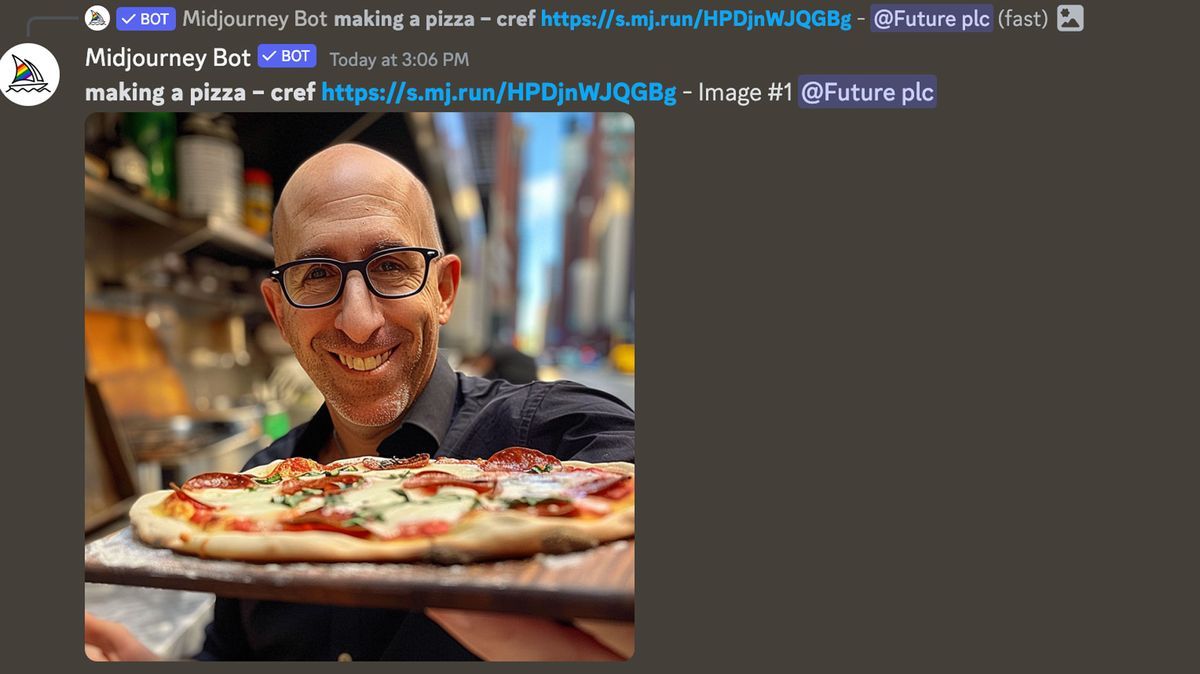

While it's easier to take one of Midjourney's creations and use it as a central character, I decided to see what Midjourney would do if I became the character, using the same “--cref” parameter. I found a photo of myself online and entered this prompt: “/imagine: making a pizza --cref [link to a photo of me]”.

Midjourney quickly spit out its interpretations of me making a pizza. At best, they captured my essence. I selected the least objectionable one and then crafted a new prompt using the URL of my favorite self.

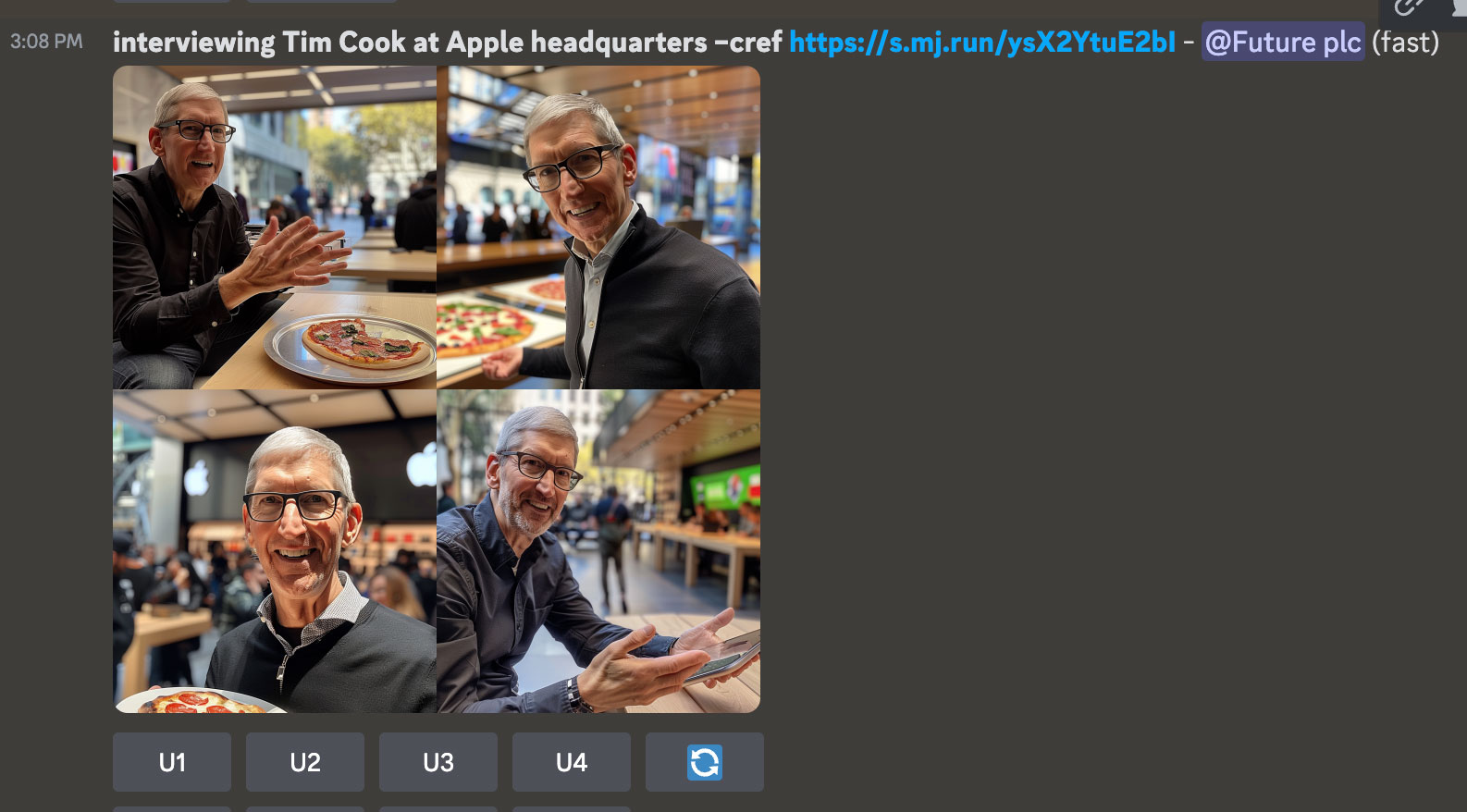

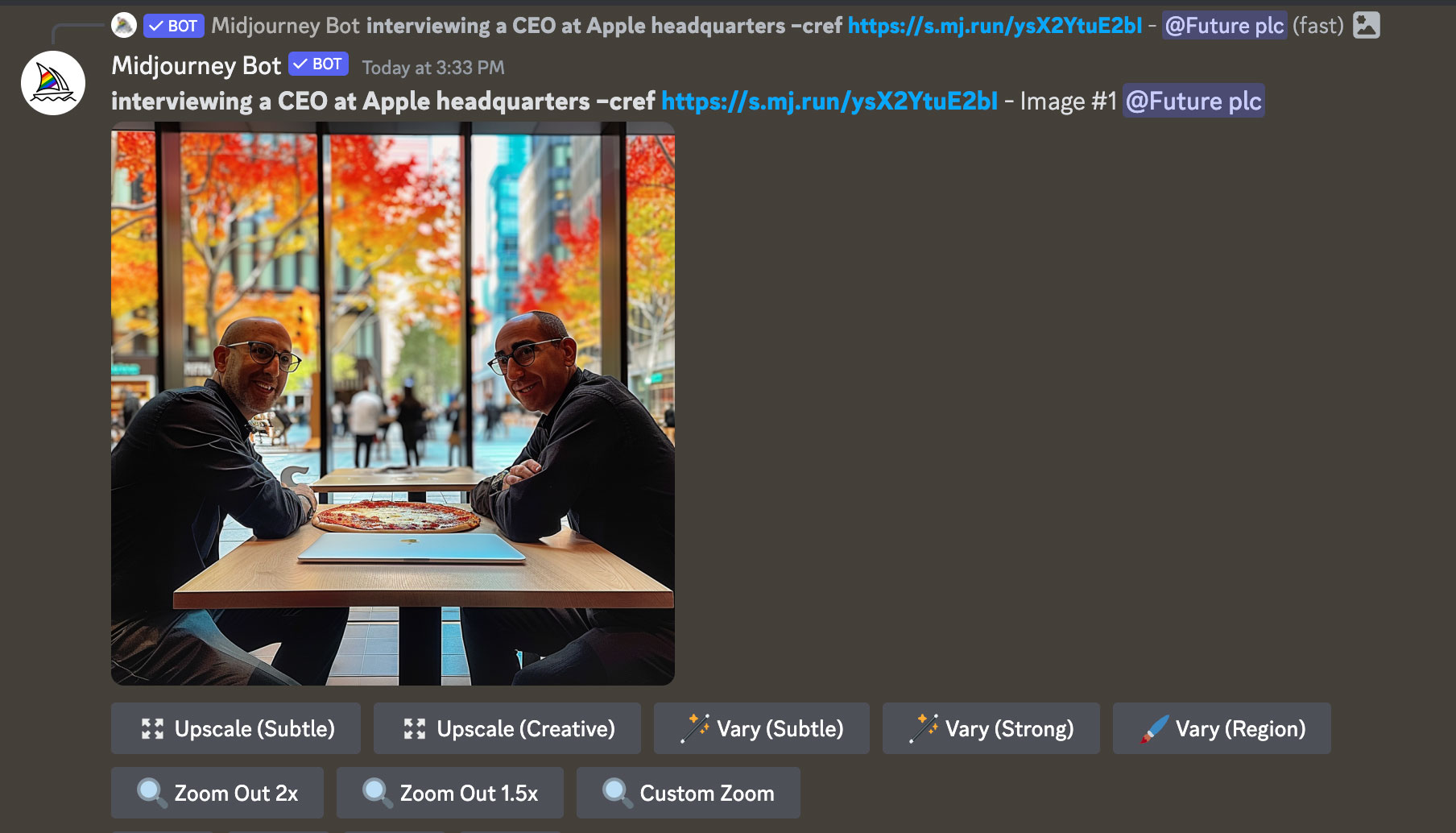

Unfortunately, when I entered this prompt, “interviewing Tim Cook at Apple headquarters,” it showed a grizzled-looking Apple CEO eating pizza, and in another image he was holding an iPad that appeared to have a pizza for a screen.

When I removed “Tim Cook” from the prompt, Midjourney was able to place my character in all four images. In each one, Midjourney Me looks slightly different, and in one of them, my favorite self appeared to be enjoying pizza with a “CEO” who also looked like me.

Midjourney's AI will improve, and soon it should be easy to create countless images featuring your favorite character. They could be used for comics, books, graphic novels, photo series, animations, and eventually generative videos.

Such a tool could speed up storyboarding but also make character animators very nervous.

If it's any consolation, I'm not sure Midjourney understands the difference between me, a pizza, and an iPad, at least not yet.