Australia faces slowing productivity growth. The Australian government's Productivity Commission, the body tasked with tackling this issue, is looking at AI as a potential part of the solution. The Commission recently published a three-paper report, Making the most of the AI opportunity: productivity, regulation and data access, to explore this opportunity further.

To maximize productivity gains from AI, the report advocates a soft regulatory approach. The Commission also recommends that governments at all levels (federal, state, and local) “lead by example” and contribute their own data and resources to promote the development of quality AI models.

Breaking down the research

The research report is divided into three separate papers.

Paper 1: AI adoption, productivity and the role of government

The first paper notes that because AI is already becoming ubiquitous in some areas online and is being incorporated into everyday tools, it is already providing productivity benefits to all businesses and individuals. These benefits are small for now but will grow over time.

While those productivity gains are notable, the report also acknowledges that AI poses risks, particularly around consumer trust. The Commission recommends that governments become part of the solution to this trust challenge by providing their own high-quality data to support the development of quality models. “The Government's interim data and digital strategy notes that the Australian Public Service manages a large amount of data that is not used to its full extent, and access remains restricted despite the clear benefits derived from securely sharing data between the public and private sectors,” the report notes.

Paper 2: The challenges of regulating AI

The second paper looks at the benefits and risks of AI and how the Australian government should regulate it. It cites the Australian Government's interim response to the Consultation on Safe and Responsible AI in Australia as a useful starting point, and positions the Productivity Commission's own document as a systematic and implementable approach to AI regulation (Figure A).

It's worth noting that Australia currently has very light regulations on AI, and the industry and public are, for the most part, looking to the soon-to-be-introduced European AI laws for guidance on the matter.

However, rather than risk regulation undermining productivity, the paper argues that the safe and ethical use of AI comes down to a number of factors such as social norms, market pressures, coding architecture and public trust. In other words, the report argues that a rigid approach to AI regulation is unlikely to address the risks, and that regulators need a more holistic approach.

Paper 3: AI increases risks to data policy

The third paper notes that data has been a resource for both the public and private sectors for decades. AI has increased the potential returns from that data while also raising the risks.

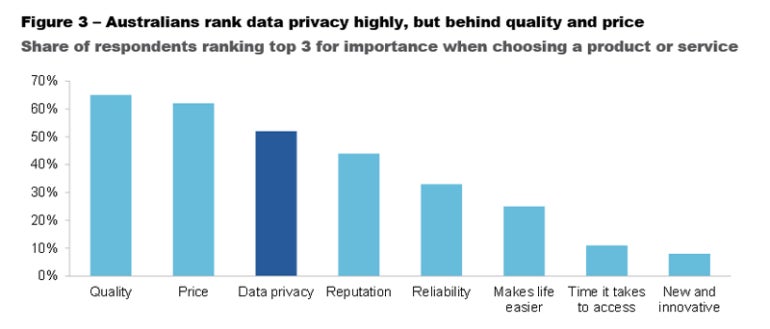

Australians know it too. As the Commission's research shows, privacy ranks alongside quality and price as one of the top three concerns among Australians when it comes to data (Figure B). To allay these concerns, the Commission recommends a national data strategy in preference to heavy-handed regulation.

“Once developed, all future regulations and guidance on data use and analysis could reference the agreed principles of the national data strategy,” the report notes. “In this way, the data strategy could provide a secure and consistent foundation for the development and use of AI and other data-intensive technologies.”

Collaboration with the government at stake

The central idea of the research is that the government should be an active participant in shaping AI. The researchers argue that the government should resist the fear of AI held in some corners and seize the opportunity to play an active part in developing best practices for AI.

For the industry, this may mean a proliferation of opportunities for the public and private sectors to come together in collaboration. Some potential opportunities include:

Industry self-regulation initiatives

Data professionals and the private sector can voluntarily adopt ethical principles, best practices and guidelines for the responsible development and use of AI, demonstrating their commitment to social values and trustworthiness. This can help reduce the need for government intervention and support the soft approach the report recommends to the Australian government.

Co-design AI policies with stakeholders

Data professionals and the private sector can actively participate in the development of AI policies and regulations and provide their experience, knowledge and feedback to government agencies. This can help ensure that policies are based on the latest technological advances, reflect the needs and interests of various stakeholders, and strike a balance between innovation and regulation.

AI Ethics Advisory Boards

The government can be guided by AI ethics advisory boards, whose advice can then serve as a framework for the development of any regulations. These boards can inform the government about risks and harms, propose mitigation strategies, and promote public awareness of and commitment to AI ethics.

Adoption of AI technologies by the public sector

Creating AI solutions that improve the delivery, efficiency and transparency of public services can result in a government more familiar with the capabilities and challenges of AI. Organizations that work extensively with government agencies should consider adding AI-related accreditations, which can help both with bidding and with the strategic support they can then provide to the government.