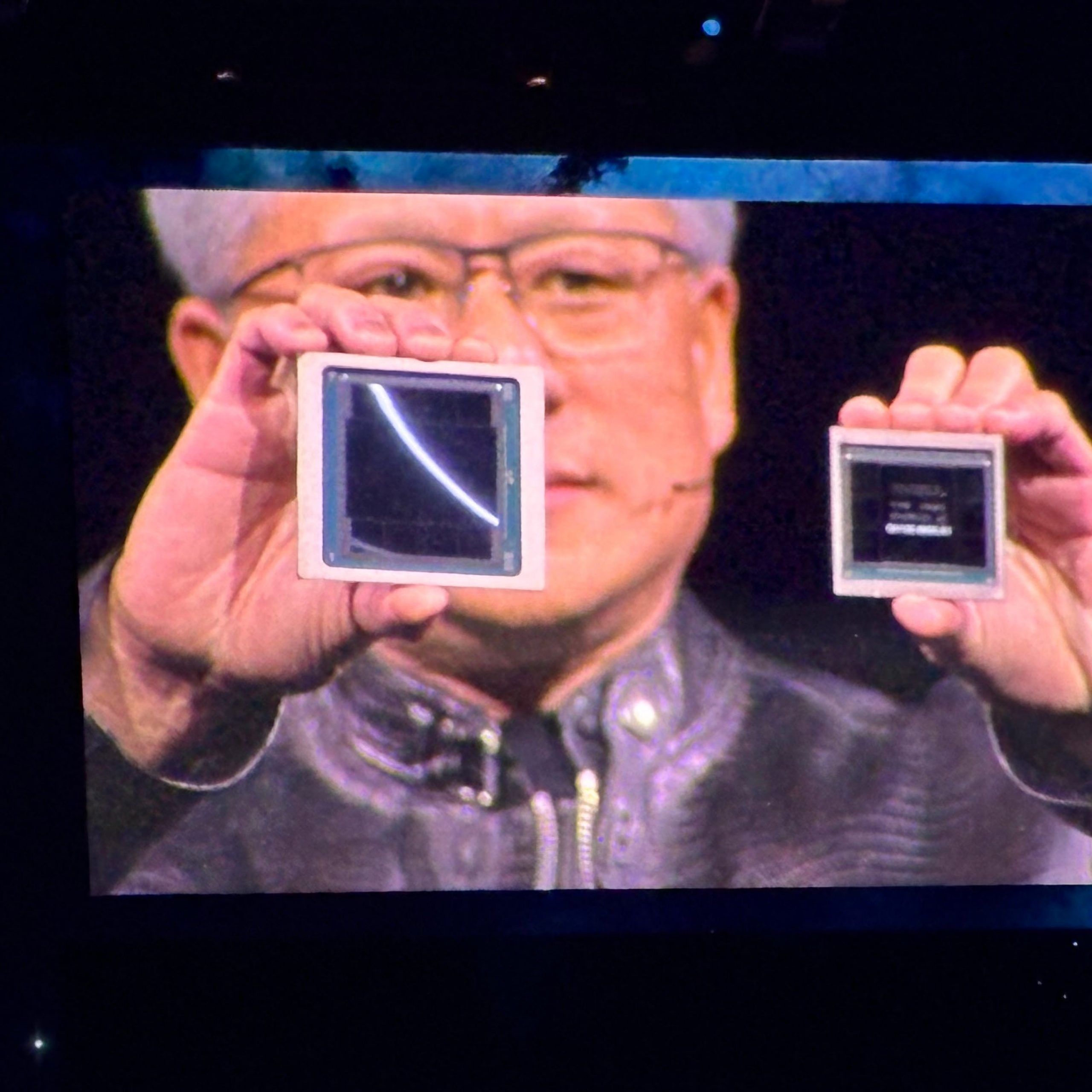

NVIDIA's newest GPU platform is Blackwell (Figure A), which companies including AWS, Microsoft, and Google plan to adopt for generative AI and other modern computing tasks, NVIDIA CEO Jensen Huang announced during his keynote at the NVIDIA GTC conference on March 18 in San Jose, California.

Figure A

Blackwell-based products will enter the market from NVIDIA partners worldwide in late 2024. Huang announced a long list of additional technologies and services from NVIDIA and its partners, speaking of generative AI as just one facet of accelerated computing.

“When you accelerate, your infrastructure is CUDA GPUs,” Huang said, referring to CUDA, NVIDIA's parallel computing platform and programming model. “And when that happens, it's the same infrastructure as for generative AI.”

Blackwell enables inference and training of large language models

Blackwell's GPU platform contains two dies connected by a 10-terabyte-per-second chip-to-chip interconnect, so that, as Huang put it, “the two dies think it's a single chip.” It has 208 billion transistors and is manufactured on TSMC's 4NP process. It offers 8 TB/s of memory bandwidth and 20 petaFLOPS of AI performance.

For enterprises, this means Blackwell can perform training and inference for AI models scaling up to 10 trillion parameters, NVIDIA said.

Blackwell is enhanced by the following technologies:

- A second-generation Transformer Engine, supported by NVIDIA's TensorRT-LLM and NeMo Megatron frameworks, that doubles the compute and model sizes compared to the first-generation Transformer Engine.

- Confidential computing with native interface encryption protocols for privacy and security.

- A dedicated decompression engine to speed up database queries in data analytics and data science.

As for reliability, Huang said Blackwell's reliability engine “performs a self-test, an in-system test, of every bit of memory on the Blackwell chip and all memory connected to it. It's like we shipped the Blackwell chip with its own tester.”

Blackwell-based products will be available through partner cloud service providers, NVIDIA Cloud Partner Program companies, and select sovereign clouds.

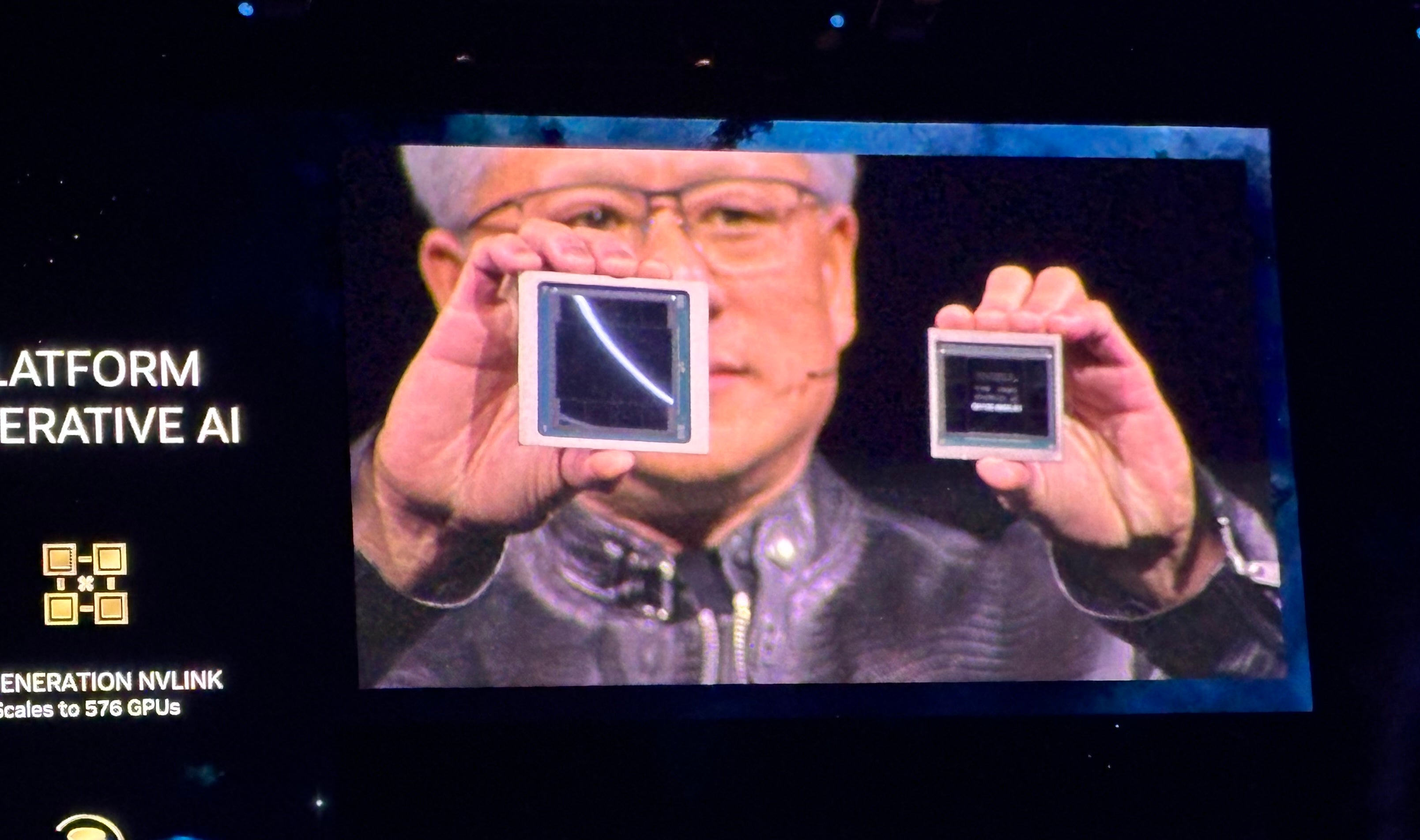

The Blackwell GPU lineup follows the Grace Hopper GPU lineup, which debuted in 2022 (Figure B). NVIDIA says Blackwell will run real-time generative AI on trillion-parameter LLMs at up to 25x lower cost and energy consumption than the Hopper line.

Figure B

NVIDIA GB200 Grace Blackwell Superchip connects multiple Blackwell GPUs

Along with Blackwell GPUs, the company announced the NVIDIA GB200 Grace Blackwell Superchip, which links two NVIDIA B200 Tensor Core GPUs to the NVIDIA Grace CPU, providing a new combined platform for LLM inference. The GB200 can be linked with the company's newly announced NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms for networking speeds of up to 800 Gb/s.

The GB200 will be available on NVIDIA DGX Cloud and through AWS, Google Cloud, and Oracle Cloud Infrastructure instances later this year.

New server design looks toward trillion-parameter AI models

The GB200 is a component of the recently announced GB200 NVL72, a rack-scale server design that includes 36 Grace CPUs and 72 Blackwell GPUs for 1.4 exaFLOPS of AI performance. NVIDIA sees potential use cases in massive trillion-parameter LLMs, including conversational AI with persistent memory, complex scientific applications, and multimodal models.
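The CPU and GPU totals follow directly from the superchip layout: each GB200 pairs one Grace CPU with two B200 GPUs, so 36 superchips give 36 CPUs and 72 GPUs. The short Python sketch below does only that arithmetic. The count of 36 superchips per rack is inferred from the article's totals rather than taken from an NVIDIA spec sheet, and the `Superchip` and `rack_totals` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Component counts for one GB200 superchip, as described in the article."""
    grace_cpus: int = 1
    blackwell_gpus: int = 2


def rack_totals(num_superchips: int, chip: Superchip = Superchip()) -> tuple[int, int]:
    """Return (total Grace CPUs, total Blackwell GPUs) for a rack of identical superchips."""
    return num_superchips * chip.grace_cpus, num_superchips * chip.blackwell_gpus


if __name__ == "__main__":
    # Assumption: one NVL72 rack holds 36 GB200 superchips (inferred from the article's totals).
    cpus, gpus = rack_totals(36)
    print(f"{cpus} Grace CPUs, {gpus} Blackwell GPUs")  # 36 Grace CPUs, 72 Blackwell GPUs
```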

The GB200 NVL72 combines fifth-generation NVLink (connected by 5,000 NVLink cables) with GB200 Grace Blackwell Superchips for a massive amount of computing power that Huang calls “an exaflops AI system in a single rack.”

“That's more than the average Internet bandwidth… we could basically send everything to everyone,” Huang said.

“Our goal is to continuously reduce the cost and energy (they are directly related to each other) of computing,” Huang said.

Two liters of water per second are needed to cool the GB200 NVL72.

Next Generation NVLink Delivers Accelerated Data Center Architecture

The fifth generation of NVLink provides 1.8 TB/s of bidirectional throughput per GPU and supports communication among up to 576 GPUs. This iteration of NVLink is intended for the largest and most complex LLMs available today.
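A bidirectional figure counts traffic in both directions, so 1.8 TB/s per GPU works out to roughly 900 GB/s each way. The Python sketch below shows that split, plus a naive aggregate across a 72-GPU NVL72 rack and across the 576-GPU maximum. It simply multiplies per-GPU bandwidth by GPU count and ignores switch topology, so treat the totals as an upper bound rather than a measured or NVIDIA-quoted figure. The constant and function names are illustrative assumptions.

```python
# Fifth-generation NVLink bandwidth per GPU, bidirectional, in GB/s (1.8 TB/s per the article).
# Integers keep the arithmetic exact.
PER_GPU_BIDIRECTIONAL_GBPS = 1_800


def per_direction_gbps(bidirectional_gbps: int) -> int:
    """Bandwidth available in each direction, assuming a symmetric link."""
    return bidirectional_gbps // 2


def naive_aggregate_gbps(num_gpus: int, per_gpu_gbps: int = PER_GPU_BIDIRECTIONAL_GBPS) -> int:
    """Sum of every GPU's NVLink bandwidth; ignores topology, so it is only an upper bound."""
    return num_gpus * per_gpu_gbps


if __name__ == "__main__":
    print(per_direction_gbps(PER_GPU_BIDIRECTIONAL_GBPS))  # 900
    print(naive_aggregate_gbps(72))   # 129600 GB/s, about 129.6 TB/s for one NVL72 rack
    print(naive_aggregate_gbps(576))  # 1036800 GB/s, about 1.04 PB/s at the 576-GPU maximum
```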

“In the future, data centers will be regarded as an artificial intelligence factory,” Huang said.

Introducing NVIDIA Inference Microservices

Another element of the potential “AI factory” is NVIDIA's Inference Microservice, or NIM, which Huang described as “a new way of receiving and packaging software.”

NIMs, which NVIDIA uses internally, are containers for deploying generative AI models. NIMs let developers work with APIs, NVIDIA CUDA, and Kubernetes in a single package.


Instead of writing code to program an AI, Huang said, developers can “put together a team of AIs” to work on the process within the NIM.

“We want to build chatbots (AI co-pilots) that work alongside our designers,” Huang said.

NIMs will be available starting March 18. Developers can experiment with NIMs at no cost and run them through an NVIDIA AI Enterprise 5.0 subscription.

Other important NVIDIA announcements at GTC 2024

Huang announced a wide range of new products and services in accelerated computing and generative artificial intelligence during the NVIDIA GTC 2024 keynote.

NVIDIA announced cuPQC, a library used to accelerate post-quantum cryptography. Developers working on post-quantum cryptography can contact NVIDIA for updates on availability.

NVIDIA's X800 series of network switches is designed to accelerate AI infrastructure; the X800 switches are expected to be available in 2025.

Key partnerships detailed during NVIDIA's presentation include:

- NVIDIA's full-stack AI platform will be on Oracle's Enterprise AI starting March 18.

- AWS will provide access to Amazon EC2 instances based on NVIDIA Grace Blackwell GPUs and NVIDIA DGX Cloud with Blackwell security.

- NVIDIA will accelerate Google Cloud with the NVIDIA Grace Blackwell AI computing platform and NVIDIA DGX Cloud service coming to Google Cloud. Google has not yet confirmed an availability date, although it will likely be late 2024. Additionally, the DGX Cloud platform powered by NVIDIA H100 will be generally available on Google Cloud starting March 18.

- Oracle will use NVIDIA Grace Blackwell in its OCI Supercluster, OCI Compute, and NVIDIA DGX Cloud on Oracle Cloud Infrastructure. Some combined Oracle and NVIDIA sovereign AI services are available starting March 18.

- Microsoft will adopt the NVIDIA Grace Blackwell superchip to accelerate Azure. Availability can be expected later in 2024.

- Dell will use NVIDIA's AI infrastructure and software suite to create Dell AI Factory, an end-to-end enterprise AI solution, available starting March 18 through traditional channels and Dell APEX. At an undisclosed point in the future, Dell will use the NVIDIA Grace Blackwell superchip as the basis for a rack-scale, high-density, liquid-cooling architecture. The Superchip will be compatible with Dell's PowerEdge servers.

- SAP will add NVIDIA retrieval-augmented generation capabilities to its Joule copilot. Additionally, SAP will use NVIDIA NIM and other joint services.

“The entire industry is preparing for Blackwell,” Huang said.

Competitors to NVIDIA AI chips

NVIDIA primarily competes with AMD and Intel when it comes to providing enterprise AI. Qualcomm, SambaNova, Groq, and a wide variety of cloud service providers play in the same space when it comes to generative AI inference and training.

AWS has its own inference and training platforms: Inferentia and Trainium. In addition to partnering with NVIDIA on a wide variety of products, Microsoft has its own AI training and inference chip: the Maia 100 AI Accelerator on Azure.

Disclaimer: NVIDIA paid for my airfare, lodging, and some meals for the NVIDIA GTC event held March 18-21 in San Jose, California.