Days after Vice President Kamala Harris launched her presidential bid, a video created with the help of artificial intelligence went viral.

“I… am your Democratic nominee for president because Joe Biden finally exposed his senility at the debate,” said a voice that sounded like Harris’s in the fake audio track used to alter one of her campaign ads. “You chose me because I am the most diverse hire.”

Billionaire Elon Musk, who has supported Harris’s Republican opponent, former President Trump, shared the video on X and clarified two days later that it was a parody. His initial tweet had 136 million views. The follow-up tweet, in which he called the video a parody, got 26 million views.

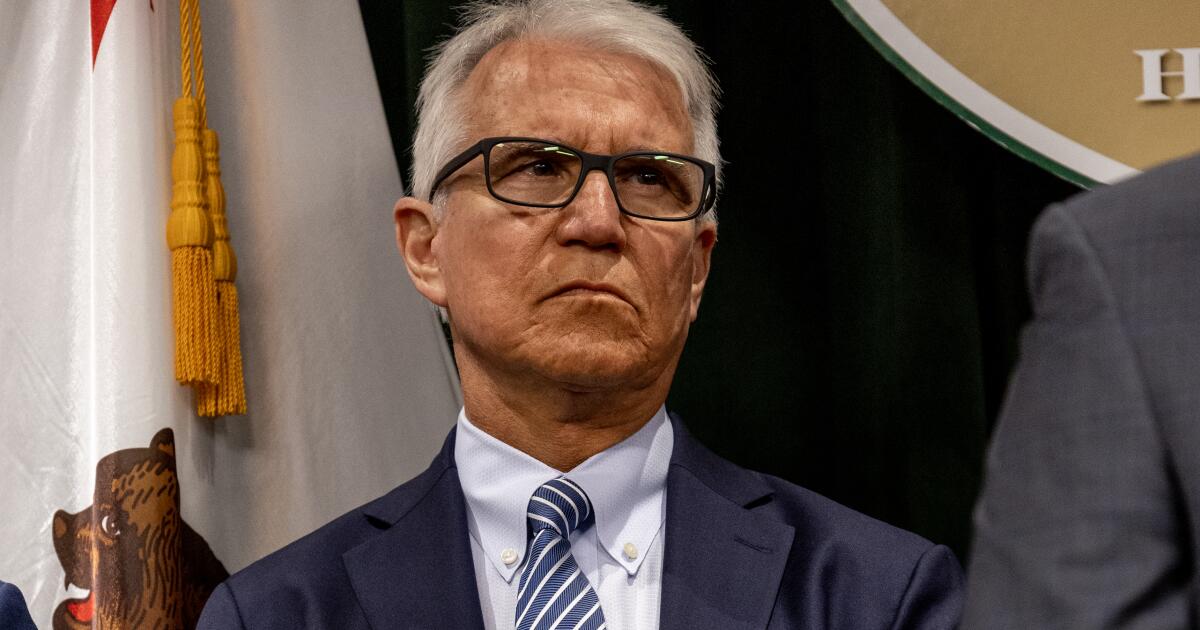

For Democrats, including California Gov. Gavin Newsom, the incident was no laughing matter. It fueled calls for more regulation to combat AI-generated videos with political messages and renewed the debate over the proper role of government in trying to contain the emerging technology.

On Friday, California lawmakers gave final approval to a bill that would ban the distribution of misleading campaign ads, or “electioneering communication,” within 120 days of an election. Assembly Bill 2839 targets manipulated content that would harm a candidate’s reputation or electoral prospects, along with confidence in the outcome of an election. It’s meant to address videos like the one Musk shared of Harris, though it includes an exception for parody and satire.

“We’re seeing California gear up for its first election where misinformation driven by generative AI will pollute our information ecosystems like never before and millions of voters won’t know which images, audio or video they can trust,” said Assemblywoman Gail Pellerin (D-Santa Cruz). “So we have to do something.”

Newsom has signaled he will sign the bill, which would take effect immediately, in time for the November election.

The legislation updates a California law that prohibits people from distributing misleading audiovisual material that is intended to damage a candidate’s reputation or mislead a voter within 60 days of an election. State lawmakers say the law needs to be strengthened during an election cycle when people are already flooding social media with digitally altered videos and photos, known as deepfakes.

The use of deepfakes to spread misinformation has worried lawmakers and regulators during previous election cycles. These fears increased after the release of new AI-powered tools, such as chatbots that can quickly generate images and videos. From fake robocalls to fake celebrity endorsements of candidates, AI-generated content is putting tech platforms and lawmakers to the test.

Under AB 2839, a candidate, election committee, or election official could seek a court order to have deepfakes removed. They could also sue the person who distributed or republished the misleading material for damages.

The legislation also applies to misleading media published in the 60 days after an election, including content that falsely portrays a voting machine, ballot, polling place or other election-related property in a manner likely to undermine confidence in the election outcome.

It does not apply to satire or parody labeled as such, nor to broadcast stations if they inform viewers that what is depicted does not accurately represent a speech or event.

Tech industry groups oppose AB 2839, along with other bills that target online platforms for failing to adequately moderate misleading election content or label AI-generated content.

“This will result in blocking and inhibiting constitutionally protected free speech,” said Carl Szabo, vice president and general counsel of NetChoice. The group’s members include Google, X and Snap, as well as Facebook’s parent company Meta and other tech giants.

Online platforms have their own rules about manipulated media and political ads, but their policies may differ.

Unlike Meta and X, TikTok doesn’t allow political ads and says it can remove even content labeled as AI-generated if it features a public figure, such as a celebrity, “when used for political or commercial endorsements.” Truth Social, a platform created by Trump, doesn’t address manipulated media in its rules about what isn’t allowed on its platform.

Federal and state regulators are already cracking down on AI-generated content.

In May, the Federal Communications Commission proposed a $6 million fine against Steve Kramer, a Democratic political consultant who placed a robocall that used artificial intelligence to impersonate President Biden. The fake call discouraged participation in New Hampshire’s Democratic presidential primary in January. Kramer, who told NBC News he planned the call to draw attention to the dangers of artificial intelligence in politics, also faces criminal charges of voter suppression and impersonating a candidate.

Szabo said current laws are sufficient to address concerns about election fraud. NetChoice has sued several states to stop some laws aimed at protecting children on social media, claiming they violate First Amendment protections for free speech.

“Simply creating a new law does nothing to stop bad behavior; you actually need to enforce the laws,” Szabo said.

More than two dozen states, including Washington, Arizona and Oregon, have enacted or passed laws to regulate deepfakes or are working on them, according to the nonprofit consumer advocacy group Public Citizen.

In 2019, California enacted a law aimed at combating manipulated media after a video that made House Speaker Nancy Pelosi appear drunk went viral on social media. Enforcing that law has been a challenge.

“We had to water it down,” said Assemblyman Marc Berman (D-Menlo Park), the bill’s author. “It brought a lot of attention to the potential risks of this technology, but I was concerned that it would ultimately not do much good.”

Instead of taking legal action, said Danielle Citron, a professor at the University of Virginia School of Law, political candidates could choose to debunk a deepfake or even ignore it to limit its spread. By the time they can get through the court system, the content may have already gone viral.

“These laws are important because of the message they send. They teach us something,” she said, adding that they inform people who share deepfakes that there are costs.

This year, lawmakers worked with the California Technology and Democracy Initiative, a project of the nonprofit California Common Cause, on several bills to address political fakery.

Some target online platforms, which are shielded by federal law from being held liable for content posted by users.

Berman introduced AB 2655, which would require an online platform with at least 1 million users in California to remove or label certain misleading election-related content within 120 days of an election. Platforms would have to take action no later than 72 hours after a user reports the post. Under the bill, which passed the Legislature on Wednesday, platforms would also need procedures to identify, remove and label false content. The bill doesn’t apply to parody or satire, or to news outlets that meet certain requirements.

Another bill, AB 3211, co-authored by Assemblywoman Buffy Wicks (D-Oakland), would require online platforms to label AI-generated content. NetChoice and TechNet, another industry group, oppose the bill, but OpenAI, the creator of ChatGPT, supports it, Reuters reported.

However, neither bill would take effect until after the election, underscoring the challenges of passing new laws as technology advances rapidly.

“Part of my hope with the bill being introduced is the attention it generates and hopefully the pressure it puts on social media platforms to behave correctly now,” Berman said.