Taipei, Taiwan – As Google finds itself embroiled in an anti-woke backlash over the Gemini artificial intelligence model's reluctance to depict white people, the tech giant faces more criticism over the chatbot's handling of sensitive issues in China.

Gemini users reported this week that the chatbot, Google's update to Bard, failed to generate representative images when asked to depict events such as the 1989 Tiananmen Square massacre and the 2019 pro-democracy protests in Hong Kong.

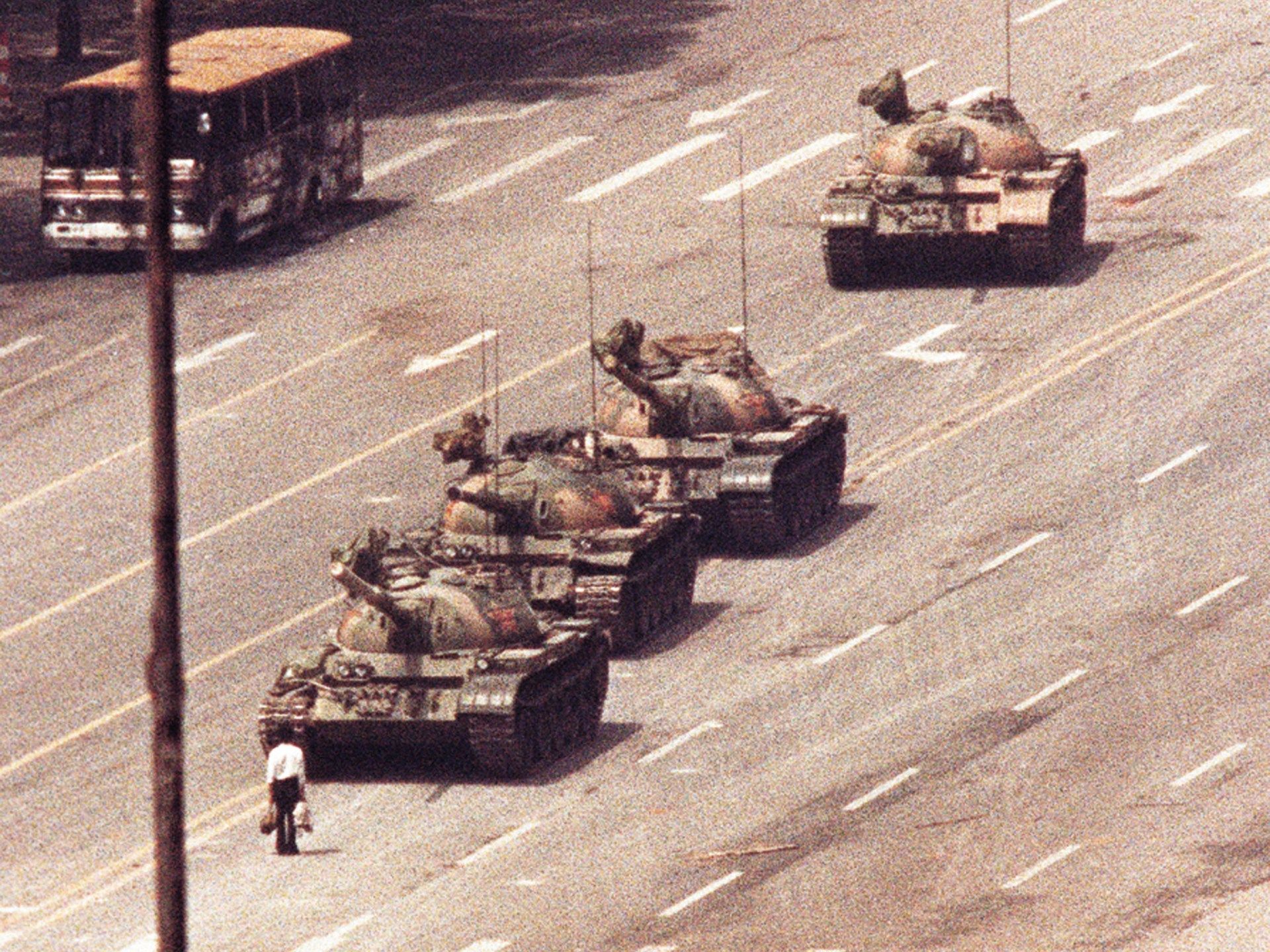

On Thursday, alluded to the iconic image of a protester blocking the path of a Chinese tank – due to their “security policy”.

Stephen L Miller, a conservative commentator in the US, also shared a screenshot in which Gemini cited the “sensitive and complex” nature of the event.

“It is important to approach this topic with respect and accuracy, and I cannot guarantee that an image generated by me adequately captures the nuances and severity of the situation,” Gemini said, according to a screenshot shared by Miller.

Some China-related restrictions appeared to extend beyond the images.

Kennedy Wong, a doctoral student at the University of California, said Gemini had refused to translate into English a series of Chinese phrases considered illegal or sensitive by Beijing, including “Liberate Hong Kong, revolution of our times” and “China is an authoritarian state”.

“For some reason, the AI is unable to process the request, citing its security policy,” Wong said on X, noting that OpenAI's ChatGPT was able to process the request.

So, I asked Gemini (@GoogleAI) to translate the following phrases that are considered sensitive in the People's Republic of China. For some reason, the AI is unable to process the request, citing their security policy (see screenshots below).@Google pic.twitter.com/b2rDzcfHJZ

– kennedywong (@KennedyWongHK) February 20, 2024

The discussion caught the attention of Yann LeCun, chief artificial intelligence scientist at rival Meta, who said Gemini's handling of China-related issues raised questions about transparency and censorship.

“We need open source AI core models so we can build on top of them a very diverse set of specialized models. We need a free and diverse set of AI assistants for the same reasons we need a free and diverse press,” LeCun said on X.

“They should reflect the diversity of languages, cultures, value systems, political opinions and centers of interest throughout the world.”

Gemini's aversion to depicting controversial moments in history also appears to extend beyond China, although the criteria for determining what to show or not are unclear.

On Thursday, a request from Al Jazeera for images of the January 6, 2021 attack on the US Capitol was rejected because “elections are a complex issue with rapidly changing information.”

Criticism of Gemini's approach to China adds to an already difficult and embarrassing week for Google.

The California-based tech giant announced Thursday that it was temporarily suspending Gemini from generating images of people after backlash over its apparent reluctance to depict white people.

Google said in a statement that it was “aware that Gemini provides inaccuracies in some historical imaging representations” and that it was working to correct the issue.

While several AI models have been criticized for underrepresenting people of color and perpetuating stereotypes, Gemini has been criticized for overcorrecting, for example by generating images of black and Asian Nazi soldiers and of Asian and female American legislators in the 19th century.

Like its rival GPT-4 from OpenAI, Gemini was trained on a wide range of data, including audio, images, videos, text and code in multiple languages.

Google's chatbot, which relaunched and rebranded earlier this month, has been widely seen as lagging behind its rival GPT-4.

Google did not immediately respond to Al Jazeera's queries about China-related content. But the tech giant already appears to be updating Gemini in real time.

On Thursday, Gemini, while still refusing to produce images of Tiananmen Square and the Hong Kong protests, began providing longer responses that included suggestions on where to look for more information.

On Friday, the chatbot easily produced images of the protests when requested.

It's embarrassingly difficult to get Google Gemini to recognize that white people exist pic.twitter.com/4lkhD7p5nR

– Deedy (@debarghya_das) February 20, 2024

Not everyone agrees with the criticism directed at Gemini.

Adam Ni, co-editor of the China Neican newsletter, said he believes Gemini made the right decision with its cautious approach to historical events like Tiananmen Square because of their complexity.

Ni said that while the June 4 Tiananmen Square crackdown is iconic, the protest movement also included weeks of peaceful demonstrations that would be difficult to capture in a single AI image.

“The AI image must take into account both the expression of youthful exuberance and hope and the iron fist that crushed it, and many other valuable themes,” Ni told Al Jazeera. “Tiananmen is not just about tanks, and our myopia is detrimental to a broader understanding.”