- Liquid cooling is no longer optional; it is the only way to survive AI's thermal onslaught

- The jump to 400VDC borrows heavily from electric vehicle supply chains and design logic

- Google's TPU supercomputers now run at gigawatt scale with 99.999% uptime

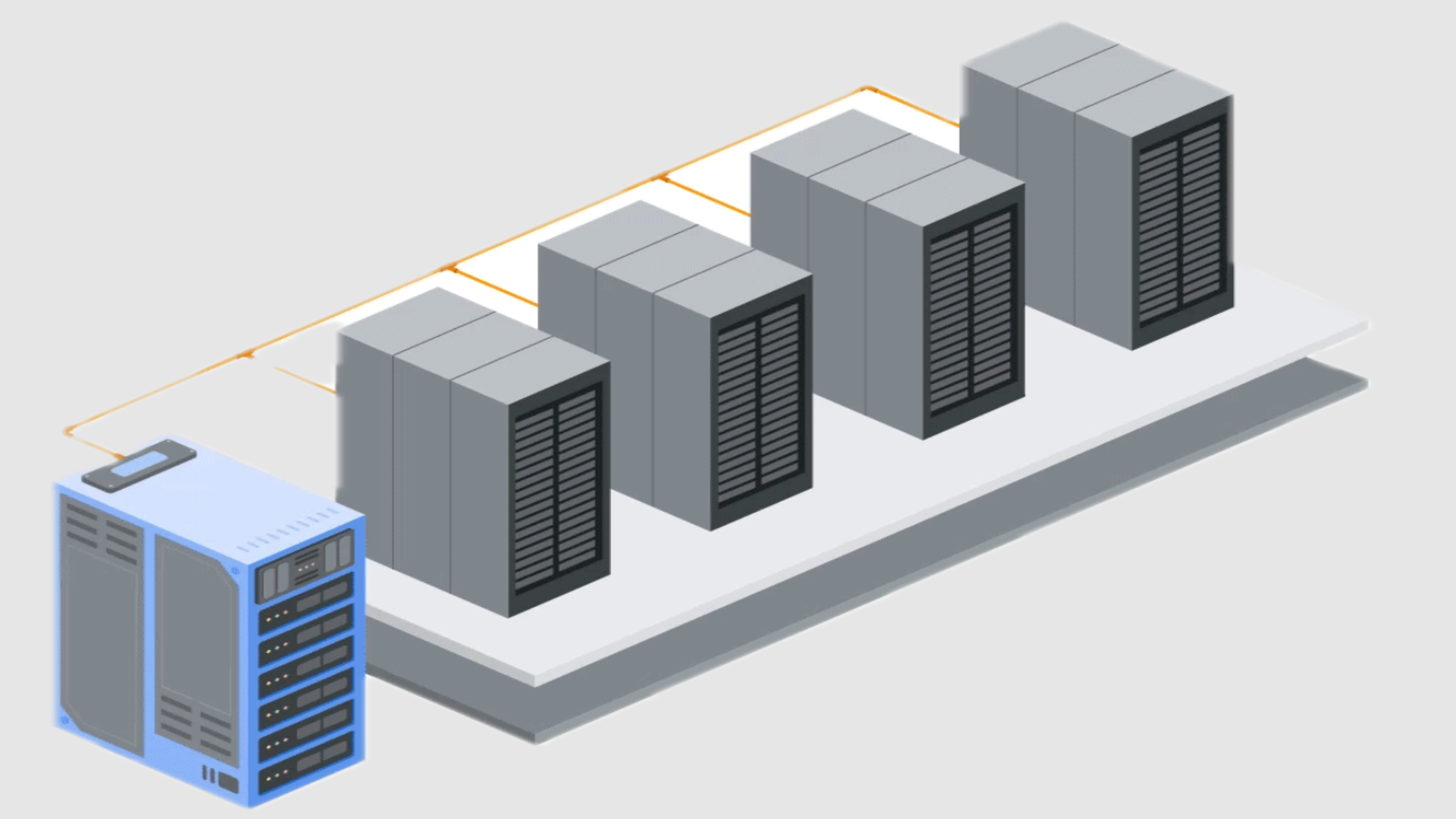

As demand for artificial intelligence workloads intensifies, the physical infrastructure of data centers is undergoing a rapid and radical transformation.

The likes of Google, Microsoft, and Meta are now drawing on technologies initially developed for electric vehicles (EVs), particularly 400VDC systems, to address the dual challenges of high-density power delivery and thermal management.

The emerging vision is of data center racks capable of delivering up to 1 megawatt of power, paired with liquid cooling systems designed to manage the resulting heat.

Borrowing EV technology for data center evolution

The shift to 400VDC power distribution marks a decisive break from legacy systems. Google previously championed the industry's move from 12VDC to 48VDC, but the current transition to +/-400VDC is being enabled by EV supply chains and driven by necessity.
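To see why higher distribution voltage matters at this scale, consider the current a bus must carry to feed a 1 MW rack. The sketch below applies the ideal relation I = P / V to the three voltage levels mentioned above. It is only an illustration: it ignores conversion losses and the +/- (bipolar) arrangement, and the function name `bus_current_amps` is made up for this example.

```python
# Illustrative only: ideal DC bus current I = P / V, ignoring conversion
# losses and the details of a +/-400VDC (bipolar) arrangement.
RACK_POWER_W = 1_000_000  # the 1 MW rack figure cited in the article


def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Return the current (in amps) needed to deliver power_w at voltage_v."""
    if voltage_v <= 0:
        raise ValueError("voltage must be positive")
    return power_w / voltage_v


for volts in (12, 48, 400):
    amps = bus_current_amps(RACK_POWER_W, volts)
    print(f"{volts:>3} VDC -> {amps:,.0f} A")
```

Under these simplifications, 1 MW at 48VDC needs roughly 20,800 A, while 400VDC needs 2,500 A. Because resistive loss in a conductor scales with the square of the current, lower current also means less heat in busbars and cabling for the same conductor.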

The Mt. Diablo initiative, backed by Meta, Microsoft, and the Open Compute Project (OCP), aims to standardize interfaces at this voltage level.

Google says this architecture is a pragmatic move that frees up valuable rack space for compute resources by decoupling power delivery from the IT racks via AC-to-DC sidecar units. It also improves efficiency by approximately 3%.

Cooling, however, has become an equally pressing problem. With next-generation chips consuming more than 1,000 watts each, traditional air cooling is quickly becoming obsolete.

Liquid cooling has emerged as the only scalable solution for handling heat in high-density compute environments.

Google has adopted this approach in large-scale deployments; its liquid-cooled TPU pods now operate at gigawatt scale and have delivered 99.999% uptime over the past seven years.
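For a sense of what a 99.999% ("five nines") figure implies, the sketch below converts an uptime percentage into allowed downtime per year. The article does not say how Google measures uptime, so this is plain arithmetic under an assumed average year of 365.25 days; `downtime_minutes_per_year` is a hypothetical helper, not part of any real tool.

```python
# Illustrative arithmetic: convert an uptime percentage to yearly downtime.
MINUTES_PER_YEAR = 365.25 * 24 * 60  # assumption: average year length


def downtime_minutes_per_year(uptime_percent: float) -> float:
    """Return the minutes of downtime per year implied by uptime_percent."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (1 - uptime_percent / 100) * MINUTES_PER_YEAR


for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% uptime -> {downtime_minutes_per_year(pct):.2f} min/year")
```

At 99.999%, this works out to a little over five minutes of downtime per year.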

These systems have replaced large heatsinks with compact cold plates, reducing the physical footprint of server hardware and increasing compute density compared to previous generations.

Despite these technical achievements, skepticism is warranted. The push toward 1MW racks rests on the assumption that demand will keep rising, a trend that may not materialize as expected.

Although Google's roadmap highlights AI's growing power needs, projecting more than 500 kW per rack by 2030, it remains uncertain whether these projections will hold across the wider market.

It is also worth noting that integrating EV-related technologies into data centers brings not only efficiency gains but also new complexities, particularly around safety and serviceability at high voltages.

Nevertheless, the collaboration between hyperscalers and the open hardware community signals a shared recognition that existing paradigms are no longer sufficient.

Via StorageReview