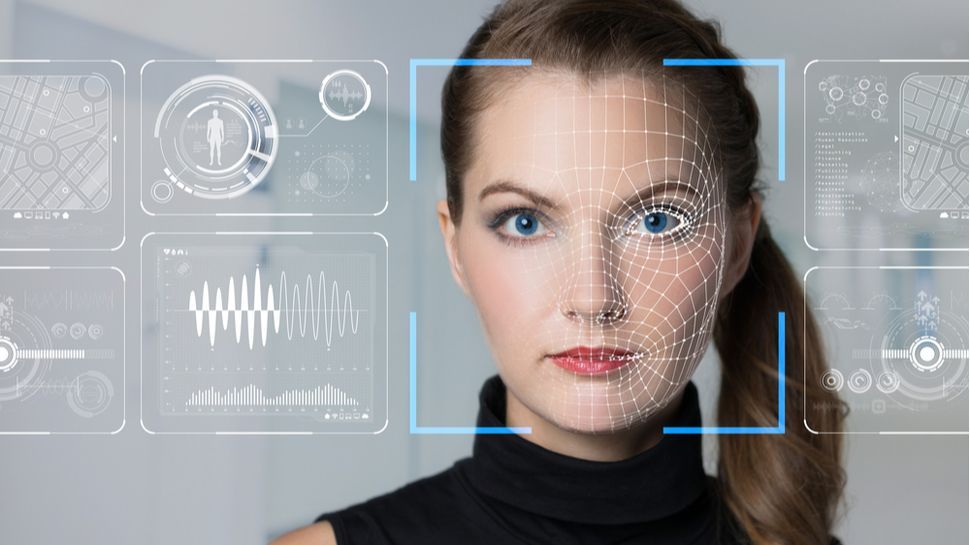

From the now-infamous Mother's Day photo released by Kensington Palace to the fake audio of Tom Cruise disparaging the International Olympic Committee, AI-generated content has been in the news recently for all the wrong reasons. These cases are sparking widespread controversy and paranoia, and leading people to question the authenticity and origin of the content they see online.

It is affecting every corner of society: not just public figures and everyday internet users, but also the world's largest companies. Chase Bank, for example, reported being fooled by a deepfake during an internal experiment. Meanwhile, one report found that deepfake incidents in the fintech sector rose by 700% in a single year.

There is currently a critical lack of transparency around AI, including about whether an image, video, or voice was generated by AI. Efficient methods of auditing AI, which would allow for greater accountability and push companies to root out misleading content more aggressively, are still being developed. These shortcomings compound the problem of trust in AI, and overcoming them depends on bringing more clarity to how AI models work. That is a major hurdle for companies that want to harness the enormous value of AI tools but worry that the risks may outweigh the rewards.

CEO and founder of Casper Labs.

Can business leaders trust AI?

All eyes are on AI right now. But while the technology has seen historic levels of innovation and investment, trust in AI and in many of the companies behind it has steadily declined. Not only is it becoming harder to distinguish human-generated from AI-generated content online, but business leaders are also increasingly wary of investing in their own AI systems. Many struggle to ensure that the benefits outweigh the risks, a challenge compounded by a lack of clarity about how the technology actually works. It is often unclear what type of data is used to train models, how that data affects the results generated, and what the technology does with a company's proprietary data.

This lack of visibility presents a number of legal and security risks for business leaders. Although AI budgets are predicted to grow as much as fivefold this year, rising cybersecurity concerns have led enterprises to block 18.5% of all AI and ML transactions, a massive 577% increase in just nine months. Finance and insurance block the highest proportion (37.16%), which is telling, since these sectors have particularly stringent legal and security requirements. They are harbingers of what could happen in other sectors as questions about AI's security and legal risks grow and businesses weigh the implications of using the technology.

While we are eager to harness the $15.7 trillion in value that AI could generate by 2030, it is clear that businesses cannot fully rely on AI right now, and this obstacle will only grow if the issues go unaddressed. There is a pressing need to introduce greater transparency into AI: to make it easier to determine whether content is AI-generated, to see how AI systems use data, and to better understand their results. The big question is how to achieve this. The lack of transparency and the erosion of trust in AI are complex problems with no single, conclusive solution, and progress will require collaboration across sectors around the world.

Addressing a complex technical challenge

Fortunately, we have already seen signs that both governments and technology leaders are focused on tackling the problem. The recent EU AI Act is an important first step in establishing regulatory guidelines and requirements for responsible AI deployment, and in the US, states such as California have taken steps to introduce their own legislation.

While these laws are valuable because they outline specific risks for industry use cases, they only provide standards to be followed, not solutions to be implemented. The lack of transparency in AI systems runs deep, extending to the data used to train models and to how that data shapes outcomes, and it poses a thorny technical problem.

Blockchain is one technology that is emerging as a potential solution. While blockchain is widely associated with cryptocurrencies, at its core the underlying technology is a highly serialized, tamper-proof data store. For AI, it can increase transparency and trust by providing an automated, certifiable audit trail of AI data, covering everything from the data used to train models to the inputs and outputs during use, and even the impact specific datasets have had on a model's outcomes.
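The property that makes such an audit trail trustworthy, that past records cannot be quietly altered, comes from hash chaining. The Python sketch below shows only that piece, under stated assumptions: each entry stores the SHA-256 hash of the previous entry, so editing any earlier record breaks the chain. It is illustrative, not any vendor's implementation, and it omits the distributed consensus a real blockchain adds. The `AuditLog` class, the event names, and the payload fields are all hypothetical.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only audit log; each entry commits to the previous entry's hash."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, event: str, payload: dict) -> dict:
        body = {
            "index": len(self.entries),
            "timestamp": time.time(),
            "event": event,  # e.g. "training_data" or "inference" (illustrative names)
            "payload": payload,  # e.g. a dataset fingerprint or prompt/output hashes
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link back to the previous entry.
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("training_data", {"dataset_sha256": hashlib.sha256(b"corpus-v1").hexdigest()})
log.append("inference", {"prompt": "Summarize the Q3 report",
                         "output_sha256": hashlib.sha256(b"answer").hexdigest()})
print(log.verify())  # True

log.entries[0]["payload"]["dataset_sha256"] = "forged"  # tamper with history
print(log.verify())  # False
```

On its own, a hash chain only lets whoever holds the latest hash detect tampering; publishing that head hash to a shared ledger is what lets outside parties, such as auditors, check the trail independently.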

Retrieval-Augmented Generation (RAG) has also emerged rapidly and is being adopted by AI leaders to bring transparency to their systems. RAG allows AI models to search external data sources, such as the internet or a company's internal documents, in real time when generating results, helping ensure those results draw on the most relevant and up-to-date information available. RAG also enables a model to cite its sources, so users can verify information for themselves rather than having to trust it blindly.
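A minimal sketch of the retrieve-then-cite step, in Python. To stay self-contained it swaps the embedding model and vector search a production RAG system would typically use for simple word-count cosine similarity, uses three hypothetical in-memory documents, and stops at building the prompt rather than calling a language model. The point is the shape of the technique: retrieve relevant passages first, then pass them to the model labeled with their sources so the answer can cite them.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real system would index a company's own sources.
DOCUMENTS = {
    "policy-2024.pdf": "Employees must not upload customer data to external AI tools.",
    "faq.html": "The expense limit for client dinners is 100 dollars per person.",
    "handbook.md": "Remote staff may claim home office equipment once per year.",
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> float:
    # Cosine similarity over raw term counts: a stand-in for embedding search.
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    dot = sum(q[t] * d[t] for t in q)
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(sum(v * v for v in d.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    # Rank all documents, keep the top k, and drop any with no overlap at all.
    ranked = sorted(DOCUMENTS.items(), key=lambda item: score(query, item[1]), reverse=True)
    return [(src, text) for src, text in ranked[:k] if score(query, text) > 0]


def build_prompt(query: str) -> str:
    # Each retrieved passage is numbered and tagged with its source so the model can cite it.
    sources = retrieve(query)
    context = "\n".join(f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(sources, 1))
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {query}"
    )


print(build_prompt("What is the expense limit for client dinners?"))
```

For this query the prompt contains only the expense-policy passage, tagged `[1] (faq.html)`, so an answer built from it can point the user back to that document.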

As for fighting deepfakes, OpenAI said in February that it would embed metadata into images generated in ChatGPT and its API to make it easier for social platforms and content distributors to detect them. The same month, Meta announced a new approach to identifying and labeling AI-generated content on Facebook, Instagram, and Threads.
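To illustrate what embedding provenance metadata in an image can look like at the file level, here is a Python sketch that writes and reads a PNG `tEXt` chunk using only the standard library. It is a simplified stand-in: the keyword `ai_generated` and its value are hypothetical, and this is not the format OpenAI or Meta use. Production provenance standards such as C2PA attach a cryptographically signed manifest rather than a plain text field.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def chunk(tag: bytes, data: bytes) -> bytes:
    # PNG chunk layout: 4-byte length, 4-byte type, data, CRC-32 over type + data.
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", zlib.crc32(tag + data))


def tiny_png() -> bytes:
    # A 1x1 8-bit grayscale image built by hand so the example needs no imaging library.
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    pixels = zlib.compress(b"\x00\x80")  # filter byte 0, then one gray pixel
    return PNG_SIGNATURE + chunk(b"IHDR", ihdr) + chunk(b"IDAT", pixels) + chunk(b"IEND", b"")


def add_text(png: bytes, keyword: str, text: str) -> bytes:
    # PNG requires IHDR to be the first chunk; insert the tEXt chunk right after it.
    ihdr_len = struct.unpack(">I", png[8:12])[0]
    split = 8 + 12 + ihdr_len  # signature + (length, type, CRC) + IHDR data
    data = keyword.encode("latin-1") + b"\x00" + text.encode("latin-1")
    return png[:split] + chunk(b"tEXt", data) + png[split:]


def read_text(png: bytes) -> dict[str, str]:
    # Walk every chunk after the signature and collect tEXt keyword/value pairs.
    found, pos = {}, 8
    while pos < len(png):
        length, tag = struct.unpack(">I4s", png[pos:pos + 8])
        data = png[pos + 8:pos + 8 + length]
        if tag == b"tEXt":
            key, _, value = data.partition(b"\x00")
            found[key.decode("latin-1")] = value.decode("latin-1")
        pos += 12 + length
    return found


png = add_text(tiny_png(), "ai_generated", "true; generator=example-model")
print(read_text(png))  # {'ai_generated': 'true; generator=example-model'}
```

A plain field like this can be edited or deleted by anyone who re-saves the file. Signing the metadata prevents forgery, but neither approach survives someone re-encoding or screenshotting the image, which is why metadata is best treated as one signal among several rather than proof on its own.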

These new regulations, governance technologies, and standards are a great first step in fostering greater trust in AI and paving the way for responsible adoption. But there is much more work to be done in the public and private sectors, particularly in light of the viral moments that have heightened public unease with AI, upcoming elections around the world, and growing concerns about AI safety in the enterprise.

We are reaching a pivotal moment in the AI adoption journey, where trust in the technology has the power to tip the balance. Only with greater transparency and trust will businesses embrace AI and their customers reap its benefits in AI-powered products and experiences that create delight, not discomfort.


This article was produced as part of TechRadarPro's Expert Insights channel, where we showcase the best and brightest minds in the tech industry today. The views expressed here are those of the author, and not necessarily those of TechRadarPro or Future plc. If you're interested in contributing, find out more here: