Edge AI inference refers to running trained machine learning (ML) models closer to end users than traditional cloud AI inference does. Edge inference shortens the response time of ML models, enabling real-time AI applications in industries such as gaming, healthcare, and retail.

What is AI inference at the edge?

Before we look at AI inference specifically at the edge, it’s worth understanding what AI inference is in general. In the AI/ML development lifecycle, inference is when a trained ML model performs tasks on new, never-before-seen data, such as making predictions or generating content. AI inference happens when end users directly interact with an ML model embedded in an application. For example, when a user enters a message into ChatGPT and gets a response, the moment ChatGPT is “thinking” is when inference occurs, and the output is the result of that inference.

Edge AI inference is a subset of AI inference whereby an ML model runs on a server close to end users, for example in the same region or even the same city. This proximity reduces latency to milliseconds for faster model response, which is beneficial for real-time applications such as image recognition, fraud detection, or game map generation.

Head of AI Product at Gcore.

How AI inference at the edge relates to edge AI

Edge AI inference is a subset of edge AI. Edge AI involves processing data and running ML models closer to the data source rather than in the cloud. It spans the full range of edge computing for AI, from edge servers (the metro edge) to IoT devices and telecom base stations (the far edge), and it covers training at the edge as well as inference. In this article, we focus on AI inference on edge servers.

Comparison between edge inference and cloud inference

With cloud AI inference, an ML model runs on a remote cloud server, and user data is sent to and processed in the cloud. In this case, an end user can interact with the model from a different region, country, or even continent. As a result, latency for cloud inference ranges from hundreds of milliseconds to seconds. This type of AI inference is suitable for applications that do not require local data processing or low latency, such as ChatGPT, DALL-E, and other popular GenAI tools. Edge inference differs in two related ways:

- Inference happens closer to the end user

- Latency is lower
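The link between distance and latency can be illustrated with a lower-bound estimate. The sketch below assumes light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and counts propagation delay only; the distances are hypothetical examples, and `min_round_trip_ms` is an illustrative helper, not part of any vendor's tooling.

```python
# Light in optical fiber travels at roughly 200,000 km/s (about two-thirds of c),
# i.e. about 200 km per millisecond. This is an approximation.
FIBER_SPEED_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on network round-trip time from propagation delay alone.

    Ignores routing detours, queuing, and processing, so real RTTs are higher.
    """
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Hypothetical distances: a metro edge PoP vs. a cloud region on another continent.
for label, km in [("edge PoP, same city", 50), ("cloud region, other continent", 8000)]:
    print(f"{label}: >= {min_round_trip_ms(km):.1f} ms")
```

Real round-trip times sit well above this floor because of indirect routes, queuing, and packet processing, but the floor alone shows why physical proximity matters.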

How AI inference works at the edge

AI inference at the edge relies on an IT infrastructure with two main architectural components: a low-latency network and servers powered by AI chips. If you need scalable AI inference that can handle load spikes, you also need a container orchestration service, such as Kubernetes, which runs on edge servers and enables your ML models to scale up or down quickly and automatically. Today, only a few vendors have the infrastructure to offer global AI inference at the edge that meets these requirements.

Low-latency network: A vendor offering AI inference at the edge should have a distributed network of edge points of presence (PoPs) where the servers are located. The more PoPs at the edge, the closer the nearest server is likely to be to each user and the shorter the network round-trip time, meaning end users receive ML model responses faster. A vendor should have dozens (or even hundreds) of PoPs around the world and should offer intelligent routing, which directs each user's request to the nearest edge server so that the globally distributed network is used efficiently.
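Intelligent routing can be approximated, in its simplest form, as "send the request to the geographically closest PoP." The sketch below assumes a hypothetical PoP list with approximate city coordinates and uses the haversine great-circle distance. Production routing usually relies on anycast, DNS-based geolocation, or measured latency rather than pure geography, so treat this as an illustration of the idea only.

```python
import math
from dataclasses import dataclass
from typing import Sequence

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class PoP:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_pop(user_lat: float, user_lon: float, pops: Sequence[PoP]) -> PoP:
    """Return the PoP with the smallest great-circle distance to the user."""
    return min(pops, key=lambda p: haversine_km(user_lat, user_lon, p.lat, p.lon))


# Hypothetical PoP list; coordinates are approximate city centers.
POPS = [
    PoP("frankfurt", 50.11, 8.68),
    PoP("amsterdam", 52.37, 4.90),
    PoP("singapore", 1.35, 103.82),
]

print(nearest_pop(48.86, 2.35, POPS).name)  # user in Paris -> amsterdam
```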

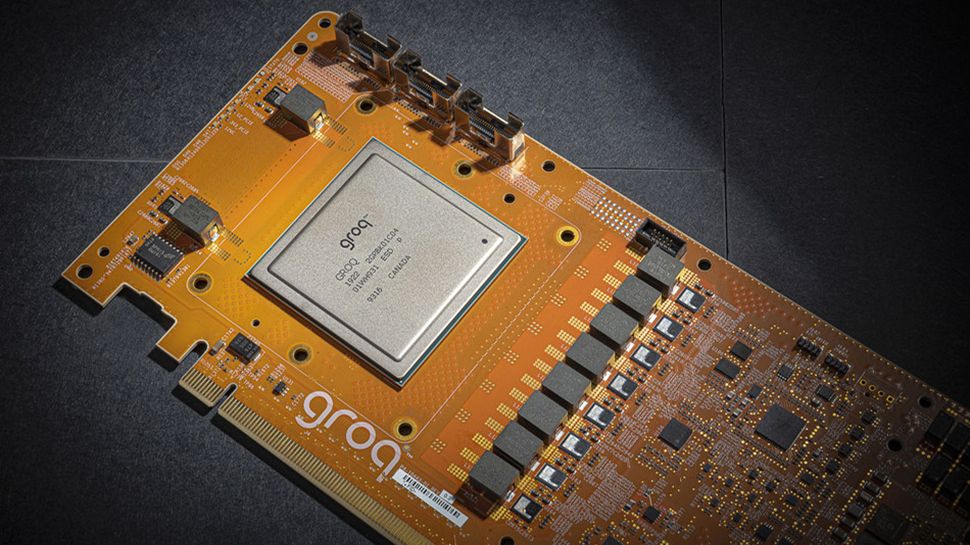

Servers with AI accelerators: To reduce computation time, you should run your ML model on a server or virtual machine that has an AI accelerator, such as an NVIDIA GPU. Some GPUs are designed specifically for AI inference. For example, one of the latest models, the NVIDIA L40S, has up to 5x faster inference performance than the A100 and H100 GPUs, which are primarily designed for training large ML models but are also used for inference. This makes the L40S one of the strongest accelerators currently available for AI inference.

Container orchestration: Deploying ML models in containers makes the models scalable and portable. A vendor can manage the underlying container orchestration tool on your behalf. In that setup, an ML engineer looking to integrate a model into an application would simply upload a container image with an ML model and get a ready-to-use ML model endpoint. When a load spike occurs, the number of containers running your ML model automatically scales up, then scales back down when the load decreases.
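To make the autoscaling step concrete, here is a minimal sketch of the replica calculation used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) of 1.0, then clamped to the configured minimum and maximum. The metric (average requests per second per replica) and all numbers are hypothetical, and a managed edge platform may use a different policy.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,  # average metric per replica, e.g. requests/s
    target_metric: float,   # desired average metric per replica
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count following the Kubernetes HPA scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical load: each inference container targets 20 requests/s.
print(desired_replicas(2, current_metric=70, target_metric=20))  # spike: 7
print(desired_replicas(4, current_metric=5, target_metric=20))   # quiet period: 1
```

The real autoscaler also applies a scale-down stabilization window (five minutes by default) so replicas are not removed the instant load dips; that behavior is omitted here for brevity.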

Key benefits of AI inference at the edge

AI inference at the edge offers three key benefits across industries and use cases: low latency, security and sovereignty, and cost efficiency.

Low latency

The lower the network latency, the faster the model responds. If a vendor's average network latency is below 50 ms, it is suitable for most applications that require a near-instant response. In comparison, cloud latency can reach a few hundred milliseconds, depending on your location relative to the cloud server. That difference is noticeable: with cloud latency, end users wait longer for AI responses, which can lead to frustration.

Keep in mind that network latency only accounts for the travel time of the data. A network latency of 50 ms doesn't mean that users will get an AI result in 50 ms; you need to add the time it takes for the ML model to perform the inference. That processing time depends on the model being used and can account for the majority of the total response time that end users experience. That's all the more reason to use a low-latency network, so that your users get the best possible response time while ML model developers continue to improve the model's inference speed.
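The arithmetic behind this point is simple addition, but it helps to see the proportions. The sketch below assumes the response time is just network round trip plus model inference time, ignoring queuing, payload transfer, and pre/post-processing. The 50 ms edge figure comes from the text above; the 300 ms cloud figure and the 200 ms inference time are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    network_rtt_ms: float  # round trip between user and server
    inference_ms: float    # time the model spends producing the result

    @property
    def total_ms(self) -> float:
        """User-perceived response time under this simplified model."""
        return self.network_rtt_ms + self.inference_ms

    @property
    def inference_share(self) -> float:
        """Fraction of the response time spent inside the model."""
        return self.inference_ms / self.total_ms


# Hypothetical figures: the same 200 ms model served from the edge and from the cloud.
edge = LatencyBudget(network_rtt_ms=50, inference_ms=200)
cloud = LatencyBudget(network_rtt_ms=300, inference_ms=200)
print(edge.total_ms, f"{edge.inference_share:.0%}")    # 250 80%
print(cloud.total_ms, f"{cloud.inference_share:.0%}")  # 500 40%
```

With these numbers, inference dominates the edge response time while network latency dominates the cloud one, which is why shaving network latency and optimizing the model are complementary.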

Security and sovereignty

Keeping data at the edge (i.e., where your users are) makes it easier to comply with local laws and regulations, such as the GDPR and its equivalents in other countries. An edge inference provider should configure its inference infrastructure to comply with local laws and ensure that you and your users are adequately protected.
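One way a routing layer can respect data locality is to restrict the candidate edge locations before picking the fastest one. The sketch below assumes a hypothetical policy that some requests must be processed in the user's own country (an illustrative rule, not what any specific regulation mandates), and uses made-up locations and round-trip times. `EdgeLocation` and `pick_location` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class EdgeLocation:
    name: str
    country: str   # ISO 3166-1 alpha-2 code
    rtt_ms: float  # measured round-trip time from this user


def pick_location(
    user_country: str,
    locations: Sequence[EdgeLocation],
    require_in_country: bool,
) -> Optional[EdgeLocation]:
    """Choose the lowest-latency location, optionally restricted to the user's country.

    Returns None when residency is required but no in-country location exists,
    so the caller can reject the request instead of sending data abroad.
    """
    candidates = [
        loc for loc in locations
        if not require_in_country or loc.country == user_country
    ]
    return min(candidates, key=lambda loc: loc.rtt_ms, default=None)


# Hypothetical locations and RTTs as seen by a user in Germany.
LOCATIONS = [
    EdgeLocation("frankfurt", "DE", 12.0),
    EdgeLocation("amsterdam", "NL", 9.0),
    EdgeLocation("paris", "FR", 15.0),
]

print(pick_location("DE", LOCATIONS, require_in_country=True).name)   # frankfurt
print(pick_location("DE", LOCATIONS, require_in_country=False).name)  # amsterdam
print(pick_location("PL", LOCATIONS, require_in_country=True))        # None
```

Note the trade-off the example exposes: enforcing locality can mean accepting a slightly slower location, which is one reason a dense network of PoPs matters.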

Edge inference also increases the confidentiality and privacy of end-user data because it is processed locally instead of being sent to remote cloud servers. This reduces the attack surface and minimizes the risk of data exposure during transmission.

Cost efficiency

Typically, a vendor charges only for the computational resources used by the ML model. This, coupled with carefully configured autoscaling and model execution schedules, can significantly reduce inference costs.

Who should use AI inference at the edge?

Below are some common scenarios where edge inference would be the optimal choice:

- Low latency is critical for your application and your users. A wide range of real-time applications, from facial recognition to business analytics, require low latency. Edge inference offers the lowest-latency inference option.

- Your user base is distributed across multiple geographic locations. In this case, you need to provide the same user experience (i.e., the same low latency) to all your users regardless of their location. This requires a globally distributed edge network.

- You don't want to deal with infrastructure maintenance. If AI and cloud infrastructure support is not part of your core business, it may be worth outsourcing these processes to a skilled and experienced partner. This way, you can focus your resources on developing your application.

- You want to keep your data local, for example within the country where it was generated. In this case, you need to perform AI inference as close to your end users as possible. A globally distributed edge network can meet this need, while the cloud is unlikely to offer the degree of distribution you need.

Which industries benefit from AI inference at the edge?

AI inference at the edge benefits any industry where AI/ML is used, but especially those developing real-time applications. In the technology sector, this would include generative AI applications, chatbots and virtual assistants, data augmentation, and AI tools for software engineers. In the gaming sector, this would be AI content and map generation, real-time player analysis, and real-time AI bot personalization and conversation. For the retail market, typical applications would be smart supermarkets with self-checkout and merchandising, virtual try-on and content generation, predictions, and recommendations.

In the manufacturing sector, the benefits include real-time defect detection in production processes, VR/XR applications, and rapid feedback response, while in the media and entertainment sector, they include content analysis, real-time translation, and automatic transcription. The automotive sector is also developing real-time applications, in particular rapid response for autonomous vehicles, vehicle personalization, advanced driver assistance, and real-time traffic updates.

Conclusion

For organizations looking to deploy real-time applications, AI inference at the edge is an essential component of their infrastructure. It significantly reduces latency, ensuring ultra-fast response times. For end users, this means a seamless and more engaging experience, whether playing online games, using chatbots, or shopping online with a virtual try-on service. Improved data security means businesses can deliver superior AI services while protecting user data. AI inference at the edge is a critical enabler for deploying AI/ML in production at scale, driving AI/ML innovation and efficiency across numerous industries.


This article was produced as part of TechRadarPro's Expert Insights channel, where we showcase the best and brightest minds in the tech industry today. The views expressed here are those of the author, and not necessarily those of TechRadarPro or Future plc. If you're interested in contributing, find out more here: