Was I imagining things, or did the iPhone 15 Pro's screen seem to distort every time someone invoked the new Siri? I wasn't. It's a clever screen animation that's part of Apple's upcoming Apple Intelligence integration with iOS 18, covering everything from the Notes and Messages apps to Siri and the powerful new Photos tools.

Apple unveiled its new set of Apple Intelligence capabilities on Monday at WWDC 2024 during a fast-paced keynote in which it was difficult to keep track of all the new features, artificial intelligence, platforms and app updates.

Now, however, I've seen some of these new features up close and noticed a few surprises, interesting options, and one or two limitations that might frustrate consumers. Of course, Apple's work on Apple Intelligence is just beginning, and what I've seen will likely look a little different when it hits iOS 18, iPadOS 18, and macOS Sequoia. Public betas aren't even expected until next month.

The new Siri makes a difference

But back to that new interaction with Siri. The update arrives with iOS 18, but only iPhones with the A17 Pro chip inside (specifically the iPhone 15 Pro and Pro Max) and all M-class Macs and iPads will be able to experience it. Now that I've seen it in action up close, that seems a real shame. As Apple's Craig Federighi explained, older Apple mobile CPUs simply don't have enough power.

Nothing changes about how you activate Siri. You can call it by name (with or without “Hey”) or press the power/sleep button. Often, that motion feels like you're squeezing the phone, and the new Siri understands that. Pressing the button distorts the black bezel of the screen. It flexes, so it looks like you're really squishing the entire phone. At the same time, the bezel lights up with iridescent colors that do not flash randomly. Instead, the glow responds to your voice. However, those animations will not appear on iPhones that do not support Apple Intelligence.

Apple Intelligence allows the new Siri to carry on a conversation that at least retains context. Ask it how your favorite team is doing and it can tell you out loud (in the demo I saw, it didn't speak). It can also show you, for example, the Mets' current MLB standings (not good). The follow-up query could simply ask about the upcoming game without mentioning the team or that you want to know something about the Mets. If there's a game you want to attend, you can ask Siri to add it to your calendar. Again, there is no need to mention the Mets or their schedule. I would call this task-based context. However, it is still unclear whether Siri can hold a conversation along the lines of OpenAI's GPT-4o.

The new Siri can be discreet when you want, accepting text input (Type to Siri) that you can invoke with a new double-tap gesture at the bottom of the screen. I'm not sure if Apple is going overboard with all these glowing boxes and borders, but yes, the Type to Siri box lights up. It's also accessible from virtually anywhere on the iPhone, including inside apps.

Photos

Apple is undoubtedly playing catch-up in the generative imaging space, especially in photo editing, where it is finally applying the powerful subject-lifting features introduced two generations of iOS ago and enhancing them with Apple Intelligence to remove distractions from the background and then fill in the blanks. Like other Apple Intelligence features, Photo Cleanup will only extend to iPhones running the A17 Pro chip. Still, it's impressive AI programming.

Cleanup will live within Photos, under Edit. Apple chose what appears to be an eraser icon to represent the feature. Yes, it's a kind of magic eraser. When you select it, a message tells you to “Touch, brush, or circle what you want to delete.”

In practice, the feature seems smart, easy to use, and quite powerful. I saw how you could circle an unwanted person in the background of a photo; it is not necessary to carefully circle only the distraction and not the subject. Apple Intelligence deliberately won't let you delete subjects. As soon as a distraction is marked and identified, you confirm the selection and it's gone. When Cleanup vaporized a couple from a nice landscape, it was cool and a little unsettling.

So that no one confuses pure photography with content assisted by Apple Intelligence, Apple adds a note to the photo's metadata: “Apple Photo Cleanup.”

Instead of Apple automatically generating memory movies, Apple Intelligence lets you write a prompt that describes a set of, say, trips with a special person. You can even tell the system to include a certain type of photo, like a landscape or a selfie.

When Apple introduced this feature on stage, I assumed all the cool animations were stage creations. I was wrong. Watching “Create a Memory Movie” at work is itself a visual treat, filled with squares of glossy photographs with images moving in and out, and text underneath that basically shows Apple Intelligence's work.

The only bad news is that if you don't like what Create a Memory Movie produced, you can't tell the system to modify the movie with a follow-up prompt. You have to start again.

Writing

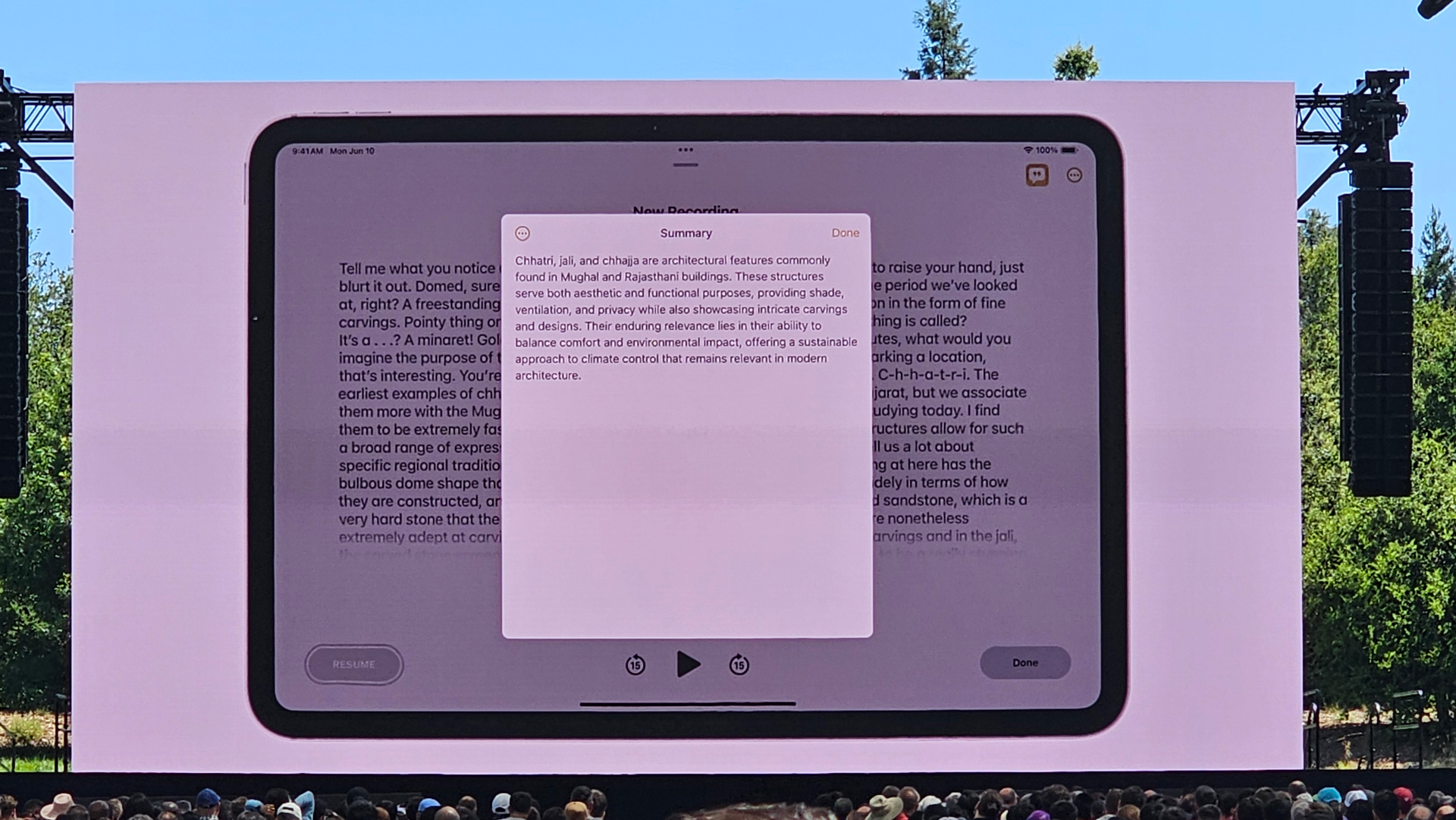

Apple Intelligence and its various local generative models are almost as ubiquitous as the platforms themselves. AI tools that enhance and assist your writing can be found in macOS Sequoia apps like Mail and Notes.

I was a little surprised to see how it works. If you type something in Notes or Mail, you must select part of the text to enable the tools. From there, you get a small, color-coded app icon where you can access proofreading and rewriting tools.

Apple Intelligence offers pop-ups to show its work and explain your options. It seems as adept at guiding your writing to make it more professional or conversational as it is at putting together brief or bulleted summaries of any selected text.

Call a ChatGPT friend

Apple Intelligence works on-device and with Private Cloud Compute. In most cases, Apple doesn't say when or if it will use its cloud. This is because Apple considers its cloud to be as private and secure as the AI on the device. It will only reach out to that cloud when the query exceeds what the local system can handle.

When Apple Intelligence decides that a third-party model might be better suited to handle your request, things are a little different.

When it's time to use OpenAI's ChatGPT to find out what you can cook with those beans you just photographed, Apple Intelligence will ask your permission to send the prompt to ChatGPT. At least you don't need to log in to ChatGPT and, better yet, you get to use the latest GPT-4o model.

The (magic) Image Wand

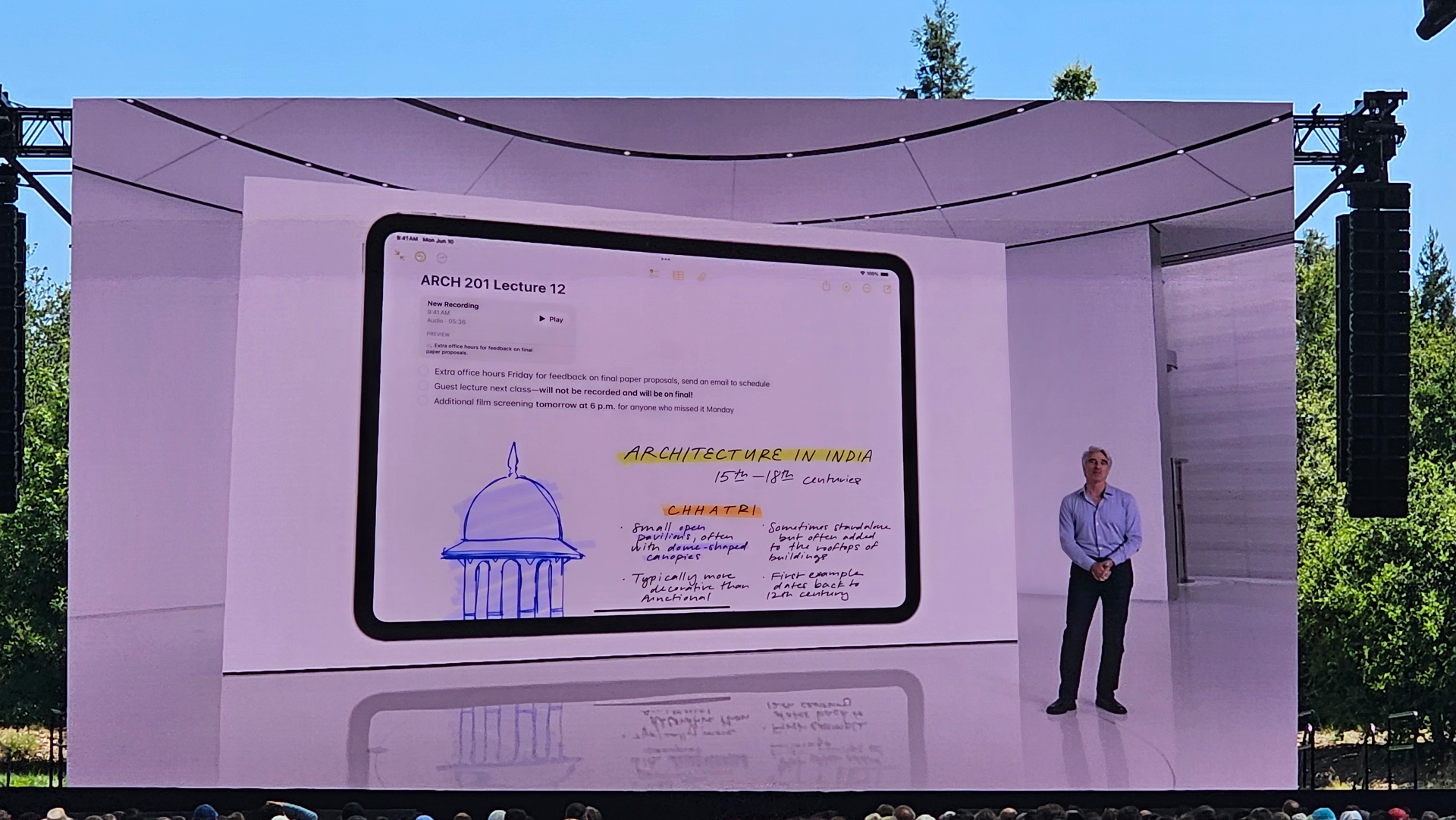

One of the coolest features I saw is Image Wand, a tool that works especially well on an iPad with Apple Pencil.

Apple didn't call it a “magic wand,” but what it can conjure is something magical.

In Notes, you can circle any content, for example a poorly sketched dog and some words that describe the dog or what you would like it to do (“play with a ball”). Apple Intelligence works to generate a professional-looking image that combines both. The scribble and words then become an illustration of a dog playing with a ball.

The most impressive thing is that you can circle the empty space next to your notes and the system will insert a fitting image based on that information. It's also pretty fast.

New reactions

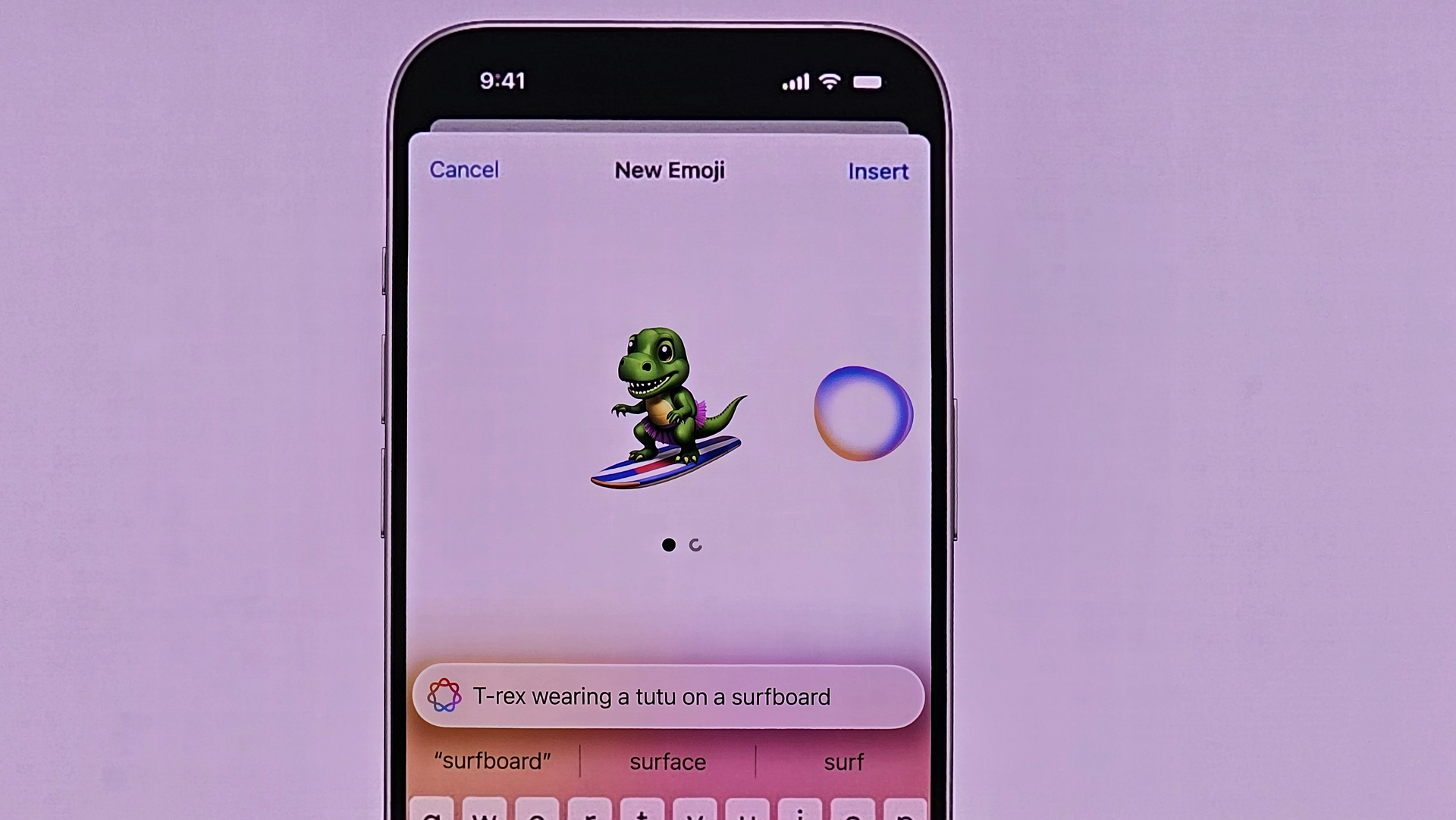

I also took a look at how the new Genmoji work. There are a limited number of official emojis you can use in messages, but Genmoji sets aside those limits and replaces them with your imagination.

Genmoji lives in iMessage: you simply tap an icon, enter your prompt, and a cute image is created that can be shared in Messages. I learned that if you try to send one of these Genmojis to someone who is not running the latest version of iOS, they may see that someone reacted to their message, but they will also receive the new Genmoji as a separate image message.

Naturally, all of this is just a taste of what will be possible with Apple Intelligence. It's expected to permeate iOS, macOS, and iPadOS, reaching your data, apps, home screens, and more. It can also change your experience in the Apple ecosystem like never before.