- Meta is developing its Aria Gen 2 smart glasses, which are packed with sensors and AI features.

- The glasses can track the wearer's gaze, movement, and even heart rate to gauge what is happening around them and how they feel about it.

- The glasses are currently being used to help researchers train robots and build better AI systems that could eventually make their way into consumer smart glasses.

The Ray-Ban Meta smart glasses are still relatively new, but Meta is already ramping up work on its new Aria Gen 2 smart glasses. Unlike the Ray-Bans, these glasses are for research purposes only, for now, but they are packed with enough sensors, cameras, and processing power that it seems inevitable some of what Meta learns from them will be incorporated into future wearable devices.

Project Aria's research-level tools, such as the new smart glasses, are used by people working in computer vision, robotics, or any relevant hybrid of contextual AI and neuroscience that catches Meta's interest. The idea is for developers to use these glasses to devise more effective methods for teaching machines to navigate, contextualize, and interact with the world.

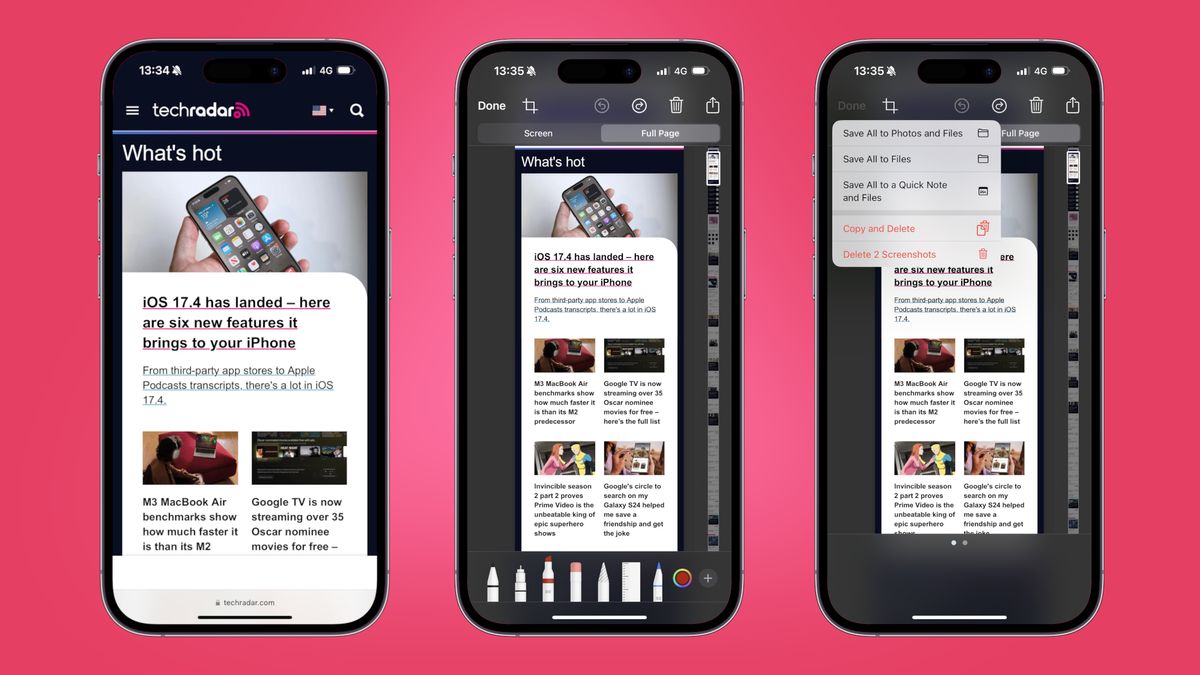

The first Aria smart glasses came out in 2020. The Aria Gen 2 glasses are far more advanced in both hardware and software. They are lighter, more accurate, and more powerful, and they look much more like the glasses people wear in everyday life, although you would not mistake them for a standard pair.

The four computer vision cameras cover an 80° arc around you and measure depth and relative distance, so the glasses can tell how far your coffee cup is from your keyboard, or where a drone's landing gear might be headed. That is only the beginning of the sensor suite, which also includes an ambient light sensor with an ultraviolet mode, a contact microphone that can pick up your voice even in noisy environments, and a pulse detector embedded in the nose pad that can estimate your heart rate.

Future facewear

There is also plenty of eye-tracking technology, able to tell where you are looking, when you blink, how your pupils change, and what you are focusing on. The glasses can even track your hands, measuring joint movement in a way that could help with training robots or learning gestures. Combined, these sensors let the glasses work out what you are looking at, how you are holding an object, and whether what you are seeing is raising your heart rate because of an emotional reaction. If you are holding an egg and spot your sworn enemy, the AI might figure out that you want to throw the egg and help you aim it accurately.

As noted, these are research tools. They are not for sale to consumers, and Meta has not said whether they ever will be. Researchers must apply for access, and the company is expected to start accepting those applications later this year.

But the implications are much larger. Meta's plans for smart glasses go far beyond checking messages. The company wants to connect the way humans interact with the real world to machines, teaching them to do the same. In theory, those robots could see, hear, and interpret the world around them the way humans do.

It will not happen tomorrow, but the Aria Gen 2 smart glasses show that it is much closer than you might think. And it is probably only a matter of time before some version of the Aria Gen 2 goes on sale to the average person. You will have that powerful AI brain sitting on your face, remembering where you left your keys and sending a robot to pick them up.