The next generation of AI will be powered by Nvidia hardware, the company declared as it revealed its latest line of GPUs.

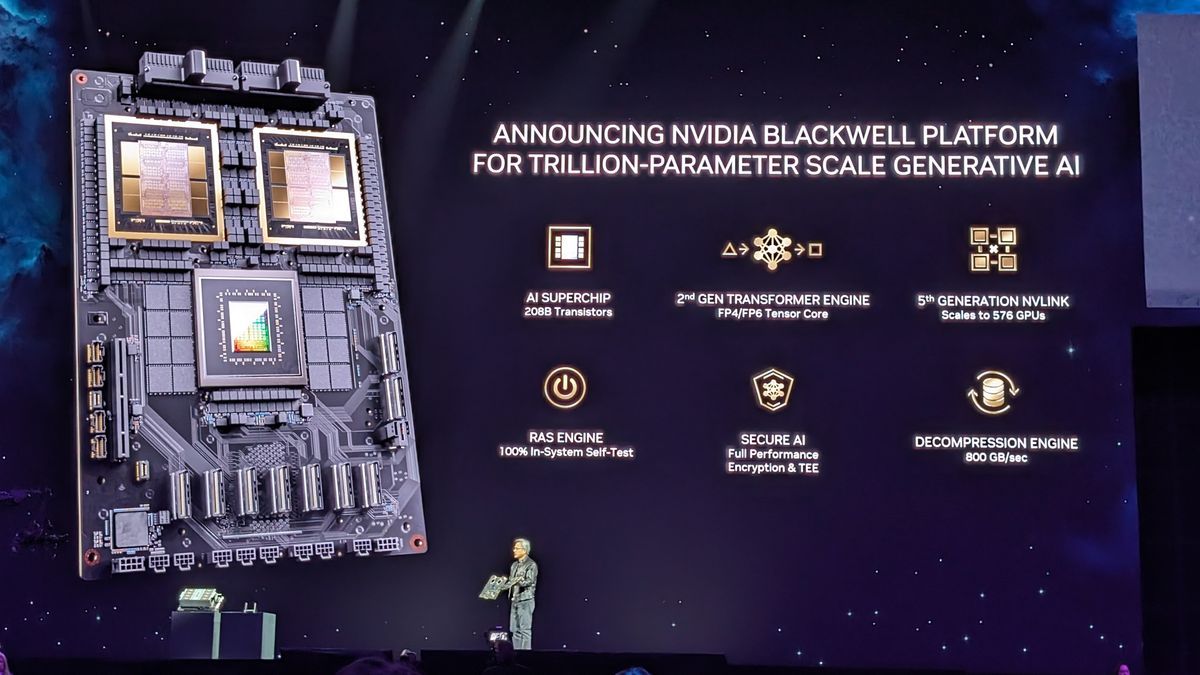

The company's CEO, Jensen Huang, today unveiled the new Blackwell chips at Nvidia GTC 2024, promising a big step forward in terms of AI power and efficiency.

Blackwell's first "superchip," the GB200, will launch later this year and can scale from a single rack to an entire data center, as Nvidia looks to extend its lead in the AI race.


Huang noted that Blackwell contains 208 billion transistors (up from 80 billion in Hopper) across its two GPU dies, which are joined by a 10 TB/second chip-to-chip link into a single unified GPU. That represents a significant step forward for the company's hardware compared with its predecessor, Hopper.

Nvidia says this makes Blackwell up to 30 times faster than Hopper on AI inference tasks, with up to 20 petaflops of FP4 compute, far ahead of anything else on the market today.

During his opening speech, Huang highlighted not only the enormous jump in power between Blackwell and Hopper, but also the large difference in size.

“Blackwell is not a chip, it is the name of a platform,” Huang said. “Hopper is great, but we need bigger GPUs.”

Despite its size, Nvidia says Blackwell can cut costs and power consumption by up to 25 times. As an example, it cited training a 1.8-trillion-parameter model, which would previously have required 8,000 Hopper GPUs and 15 megawatts of power, but can now be done with just 2,000 Blackwell GPUs consuming only four megawatts.
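The numbers in Nvidia's training example can be checked with some back-of-the-envelope arithmetic. The sketch below uses only the figures quoted in that example and assumes the megawatt values describe total system power, so the "per GPU" values are crude averages rather than chip specifications. On these numbers alone, the example works out to about 4x fewer GPUs and 3.75x less power, so it does not by itself account for the "up to 25 times" figure.

```python
# Figures quoted in Nvidia's example of training a 1.8-trillion-parameter model.
# Assumption: the megawatt values are total system power for each cluster.
hopper_gpus, hopper_mw = 8_000, 15
blackwell_gpus, blackwell_mw = 2_000, 4

# How many times fewer GPUs and how much less power the Blackwell setup uses.
gpu_ratio = hopper_gpus / blackwell_gpus
power_ratio = hopper_mw / blackwell_mw

# Average system power per GPU in kilowatts (1 MW = 1,000 kW).
hopper_kw_per_gpu = hopper_mw * 1_000 / hopper_gpus
blackwell_kw_per_gpu = blackwell_mw * 1_000 / blackwell_gpus

print(f"{gpu_ratio:.2f}x fewer GPUs, {power_ratio:.2f}x less power")
print(f"Hopper: {hopper_kw_per_gpu:.3f} kW/GPU, Blackwell: {blackwell_kw_per_gpu:.3f} kW/GPU")
```

Running it prints `4.00x fewer GPUs, 3.75x less power`, and shows that each Blackwell GPU in the example draws slightly more system power on average (2.0 kW versus 1.875 kW), with the savings coming from needing far fewer of them.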

The new GB200 combines two Nvidia B200 Tensor Core GPUs with a Grace CPU to create what the company simply calls "a massive superchip" capable of accelerating AI development, delivering 7 times the performance and four times the training speed of an H100 system.

The company also revealed a next-generation NVLink network switch chip with 50 billion transistors, which will let up to 576 GPUs communicate with each other at 1.8 terabytes per second of bidirectional bandwidth per GPU.

Nvidia has already signed up a host of major partners to build Blackwell-powered systems, with AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure on board alongside many other big industry names.