Have you searched for something recently only to be met by a nice diamond logo above some magically appearing words? Google's AI Overview combines Google's Gemini language models (which generate the answers) with retrieval-augmented generation, which pulls in the relevant information.

In theory, it has made an incredible product, Google's search engine, even easier and faster to use.

However, because creating these summaries is a two-step process, problems can arise when there is a disconnect between retrieval and language generation.

While the retrieved information may be accurate, the AI can make erroneous leaps and draw strange conclusions when generating the summary.

That has led to some famous gaffes, such as when it became the laughing stock of the internet in mid-2024 for recommending glue as a way to make sure cheese doesn't slide off your homemade pizza. And we loved the time it described running with scissors as “a cardiovascular exercise that can improve your heart rate and require concentration and focus.”

These prompted Liz Reid, head of Google Search, to publish an article titled About last week, stating that these examples “highlighted some specific areas that we needed to improve.” More than that, she diplomatically blamed “nonsensical queries” and “satirical content.”

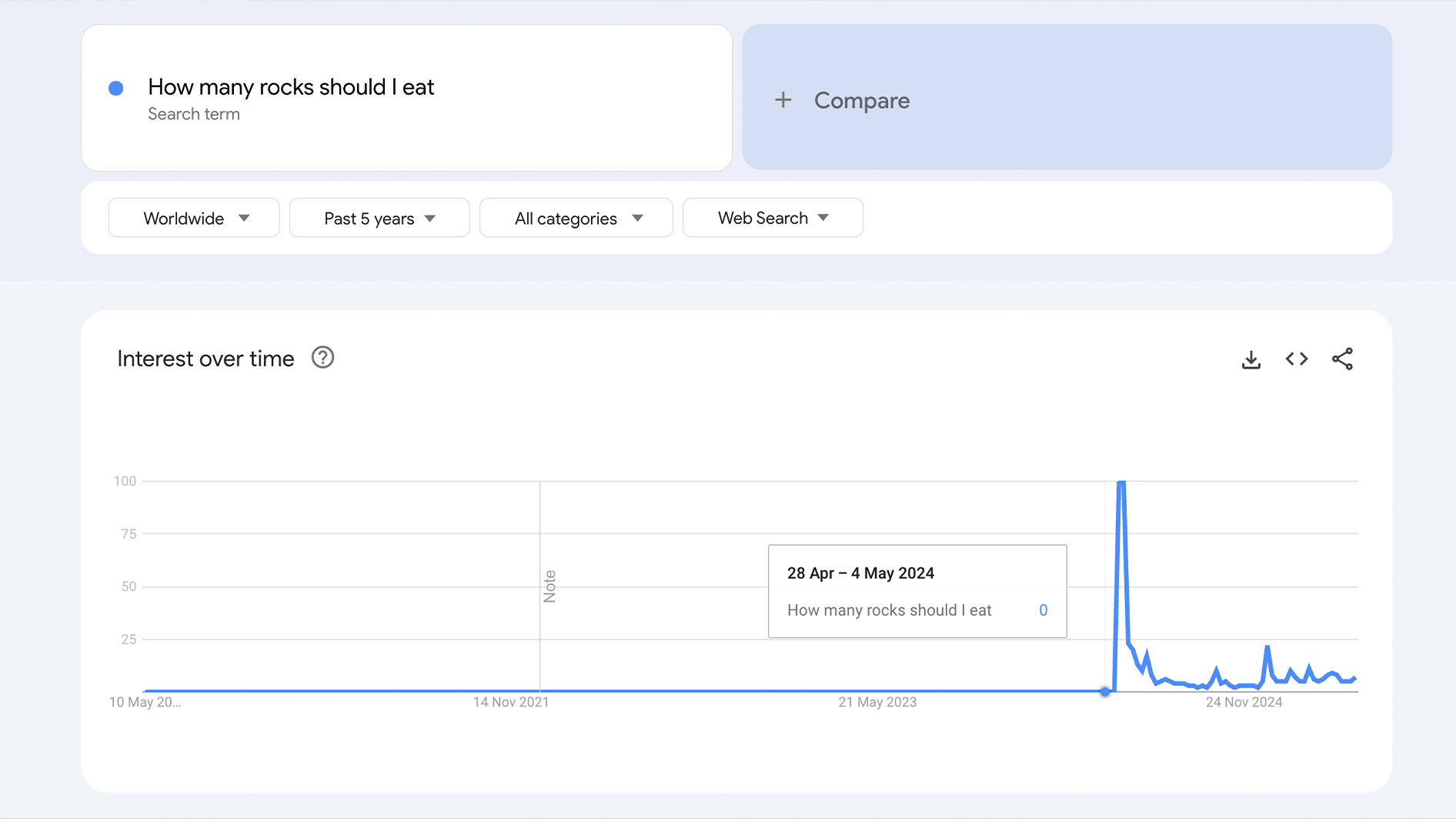

She was at least partly right. Some of the problematic queries were made purely to make the AI look stupid. As you can see below, the query “How many rocks should I eat?” was not a common search before the introduction of AI Overviews, and it has not been since.

However, almost a year after the pizza-glue fiasco, people are still tricking Google's AI Overviews into fabricating information, or “hallucinating,” the euphemism for AI lies.

Engadget reported that many misleading queries now seem to be ignored, but only last month it reported that AI Overviews would make up explanations for fake idioms such as “you can't marry pizza” or “never rub a basset hound's laptop.”

So, AI is often wrong when you intentionally trick it. Big deal. But now that it is used by billions and includes crowd-sourced medical advice, what happens when a genuine question makes it hallucinate?

While AI works wonderfully if everyone who uses it checks where it got its information, many people, if not most, are not going to do that.

And therein lies the key problem. As a writer, I already find AI Overviews inherently a bit annoying because I want to read content written by humans. But even leaving aside my pro-human bias, AI becomes seriously problematic if it is so easily unreliable. And it has arguably become dangerous now that it is basically ubiquitous whenever you search, and a certain portion of users will take its information at face value.

I mean, years of searching have trained us all to trust the results at the top of the page.

Wait … Is it true?

Like many people, I sometimes struggle with change. I didn't like it when LeBron went to the Lakers, and I stuck with an MP3 player over an iPod for far too long.

However, since it is now the first thing I see on Google most of the time, Google's AI Overviews are a bit harder to ignore.

I have tried to use it like Wikipedia: potentially unreliable, but good for reminding me of forgotten information or for learning the basics of a topic that won't cause me any grief if it isn't 100% accurate.

However, even on seemingly simple queries it can fail spectacularly. As an example, I was watching a movie the other week and one guy really looked like Lin-Manuel Miranda (creator of the musical Hamilton), so I Googled whether he had any brothers.

The AI Overview informed me that “Yes, Lin-Manuel Miranda has two younger brothers named Sebastián and Francisco.”

For a few minutes I thought I was a genius at recognizing people … until additional research showed that Sebastián and Francisco are actually Miranda's two sons.

Wanting to give it the benefit of the doubt, I figured it would have no problem listing Star Wars quotes to help me think of a headline.

Fortunately, it gave me exactly what I needed: “Hello there!” and “It's a trap!” It even quoted “No, I am your father” instead of the oft-repeated “Luke, I am your father.”

Along with these legitimate quotes, however, it claimed that Anakin had said “If I go, I go with a bang” before his transformation into Darth Vader.

I was shocked that it could be so wrong … and then I started to second-guess myself. I began to think that I must be mistaken. I was so unsure that I triple-checked whether the quote existed and shared it with the office, where it was quickly (and correctly) dismissed as another bout of AI lunacy.

This little bit of doubt, about something as silly as Star Wars, scared me. What would happen if I had no knowledge of the subject I was asking about?

One study actually shows that Google's AI Overviews avoid (or respond cautiously to) topics of finance, politics, health, and law. This means Google knows that its AI is not up to handling more serious queries.

But what happens when Google thinks it has improved to the point that it can?

It's the technology … but also how we use it

If you could trust everyone who uses Google to verify the AI's results, or to click through to the source links the overview provides, its inaccuracies would not be a problem.

But as long as there is an easier option, a more frictionless path, people tend to take it.

Despite having more information at our fingertips than at any previous time in human history, in many countries our literacy and numeracy skills are declining. Case in point: a 2022 study found that only 48.5% of Americans reported having read at least one book in the previous 12 months.

It is not the technology itself that is the problem. As Associate Professor Grant Blashki eloquently argues, how we use technology (and indeed, how we come to use it) is where problems arise.

For example, an observational study conducted by researchers at Canada's McGill University found that regular use of GPS can lead to impaired spatial memory and an inability to navigate on your own. I can't be the only one who has used Google Maps to get somewhere and had no idea how to get back.

Neuroscience has clearly demonstrated that struggling is good for the brain. Cognitive load theory holds that your brain needs to think about things in order to learn. It's hard to imagine struggling much when you search for a question, read the AI summary, and then call it a day.

Make the decision to think

I am not committing to never using GPS again, but given that Google's AI Overviews are not consistently reliable, I would get rid of them if I could. Unfortunately, there is no way to do that for now.

Even hacks like adding a cuss word to your query no longer work. (And although using the F-word still seems to work most of the time, it also makes for weirder and more, uh, 'adult-oriented' search results that you are probably not looking for.)

Of course, I will still use Google, because it's Google. It will not reverse its AI ambitions any time soon, and although I could wish for it to restore the option to opt out of AI Overviews, perhaps the devil you know is better.

For now, the only true defense against AI misinformation is to make a concerted effort not to use it. Let it take notes from your work meetings or think up some pick-up lines, but when it comes to using it as a source of information, I will scroll past it and look for a quality human-written (or at least human-verified) article in the top results, as I have done for almost my entire existence.

I mentioned earlier that one day these AI tools could genuinely become a reliable source of information. They could even be smart enough to take on politics. But today is not that day.

In fact, as reported on May 5 by The New York Times, as Google's and ChatGPT's tools become more powerful, they are also becoming increasingly unreliable, so I am not sure that I will ever trust them to summarize any political candidate's policies.

When the hallucination rate of these 'reasoning systems' was tested, the highest recorded rate was a whopping 79%. Amr Awadallah, the chief executive of Vectara, an AI agent and assistant platform for businesses, put it bluntly: “Despite our best efforts, they will always hallucinate.”