Microsoft has already been raked over the coals for its incoming Recall feature for Windows 11 by both security researchers and privacy advocates, and it will need a fireproof suit for the latest wave of criticism aimed at the AI-powered feature.

This comes from security expert Kevin Beaumont, as The Verge highlights. The site notes that Beaumont briefly worked for Microsoft a few years ago.

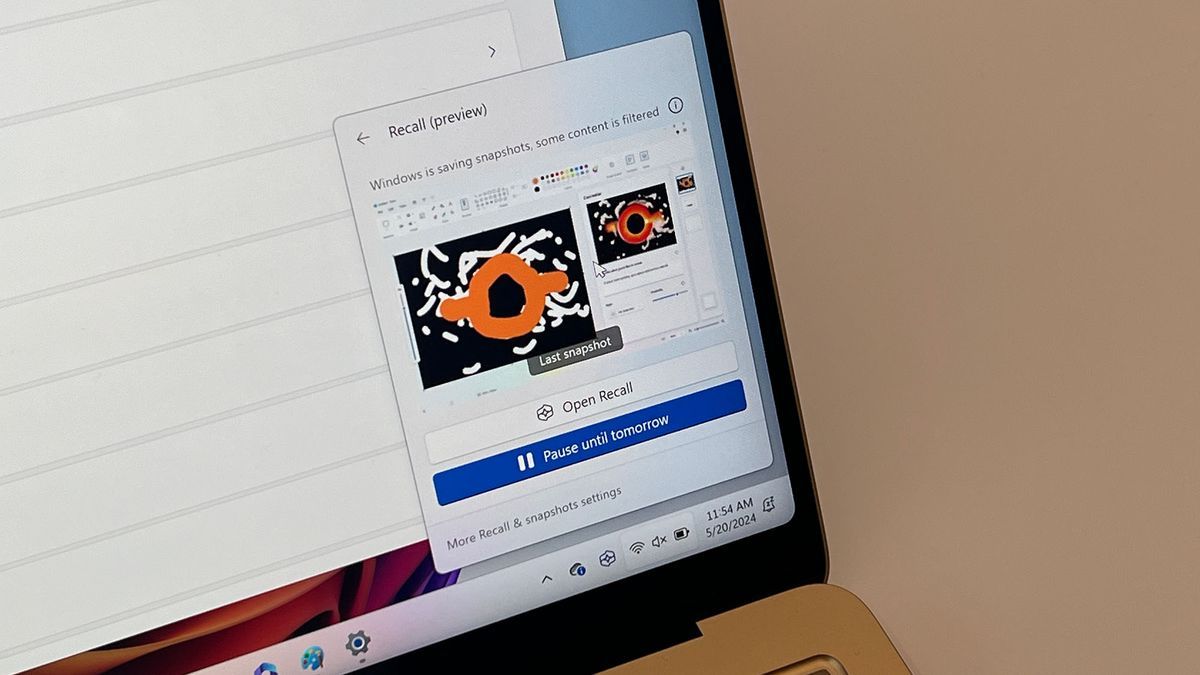

In short, in case you somehow missed it, Recall is an AI feature for Copilot+ PCs, launching later this month, that acts as a photographic timeline: essentially a history of everything you've done on your PC, recorded through screenshots that are taken regularly in the background of Windows 11.

Beaumont got Recall working on a regular (non-Copilot+) PC, which can be done but is not recommended performance-wise, and has been tinkering with it for a week.

He has come to the conclusion that Microsoft has made a big mistake here, at least going by the feature as it is currently implemented (and as it is about to ship, of course). In fact, Beaumont claims that Microsoft is “probably going to burn down the entire Copilot brand because of how poorly it has been implemented and deployed,” no less.

So what's the big problem? Well, mainly, it's the lack of thought about security and how there's a major discrepancy between Microsoft's description of how Recall is apparently kept airtight and what Beaumont has found.

Microsoft told the media that a hacker cannot leak Copilot+ Recall activity remotely. Fact: How do you think hackers will extract this plain text database of everything the user has ever seen on their PC? Very easy, I have it automated. HT detective pic.twitter.com/Njv2C9myxQ (May 30, 2024)

As you can see from the post above, Microsoft told the media that a hacker cannot extract Recall activity remotely. In other words, an attacker would need to access the device physically, in person, and this is not true.

In a long blog post on this topic, Beaumont explains: “This is wrong. Data can be accessed remotely.” Note that Recall does work entirely locally, as Microsoft said; it is just not impossible to access the data remotely, contrary to what was suggested (provided you can get access to the PC, of course).

As Beaumont explains, the other big problem here is the Recall database itself, which contains all the data from those screenshots and your PC's usage history, and all of this is stored in plain text (in an SQLite database).

This makes it very easy to harvest all the information about exactly how you have been using your Windows 11 PC, assuming an attacker can access the device (either remotely or in person).

Analysis: Recall or Regret Feature

There are many other concerns here as well. As Microsoft noted when it revealed Recall, there are no limits to what can be captured in your AI-powered PC activity history (with a few small exceptions, like Microsoft Edge's private browsing mode but, tellingly, not Chrome's Incognito mode).

Sensitive financial information, for example, will not be excluded, and Beaumont further notes that auto-deleting messages in messaging apps will also be screenshotted, so they can be read via a stolen Recall database. In fact, any message you delete from WhatsApp, Signal, or any other service could be read if Recall is compromised.

But wait a minute, you might be thinking: if a hacker remotely accesses your PC, aren't you in serious trouble anyway? Well, yes, that's true: it's not like these Recall details can be accessed unless your PC is actively exploited (although part of Beaumont's problem is Microsoft's apparently erroneous statement that any kind of remote access to the Recall data was not possible at all, as mentioned above).

The real catch here is that if someone gets into your PC, Recall apparently makes it very easy for that attacker to obtain all of these potentially very sensitive details about your usage history.

While there are already information-stealing Trojans that continually attack victims on a large scale, Recall could allow this type of personal data collection to be done ridiculously quickly and easily.

This is the crux of the criticism, as Beaumont explains: “Recall allows threat actors to automate the extraction of everything you've seen in a matter of seconds. During testing with an off-the-shelf infostealer, I used Microsoft Defender for Endpoint, which detected the off-the-shelf infostealer, but by the time the automated remediation kicked in (which took more than ten minutes), my Recall data was already long gone.”

This is one of the main reasons why Beaumont calls Recall “one of the most ridiculous security flaws I've ever seen.”

That is, if Microsoft doesn't take action before launch. There is still time, in theory at least, although the launch of Copilot+ PCs is now very close. (Recall could still be temporarily shelved while more work is done on it, perhaps.)

If Recall ships as currently implemented, Beaumont recommends turning it off: “Also, to be very clear, you can turn this off in Settings when it ships, and I highly recommend you do that unless they change the feature and experience.”

Here lies another thorny issue: the AI-powered feature is enabled by default. Recall is highlighted during the Copilot+ PC setup experience and you can turn it off, but the way this is implemented means you have to check a box to enter Settings after setup and then turn off Recall there; otherwise it will simply be left on. And some Windows 11 users will probably fall into the trap of not understanding what the checkbox option means during setup and end up with Recall turned on by default.

This is not the way a feature like this should work, particularly given the privacy concerns highlighted here, and we've already made our opinion on the matter pretty clear. Anything with capabilities as wide-ranging as Recall's should be disabled by default, surely, or users should be presented with a very clear choice during setup, not some weird 'check this box, jump through these hoops later' kind of antics.
