OpenAI has just held its long-awaited Spring Update event, making a series of exciting announcements and demonstrating the capabilities of its newest GPT model. Among the news were changes to model availability for all users, and at the center of attention was GPT-4o.

Arriving just 24 hours before Google I/O, the launch puts Google's Gemini in a new light. If GPT-4o is as impressive as it seemed, Google's upcoming Gemini update had better be mind-blowing.

What's all the fuss about? Let's dive into the details of what OpenAI announced.

1. GPT-4o is announced and demonstrated, and it will be available to all users for free

The biggest announcement of the broadcast was the introduction of GPT-4o (the 'o' stands for 'omni'), which combines real-time audio, image and text processing. Over time, this version of OpenAI's GPT technology will be available to all users for free, with usage limits.

For now, however, it's rolling out to ChatGPT Plus users, who will get up to five times the messaging limits of free users. Team and Enterprise users will also get higher limits and faster access.

GPT-4o offers GPT-4-level intelligence, but is faster and more responsive in everyday use. Additionally, you can give it, or ask it to generate, any combination of text, images and audio.

In the broadcast, OpenAI CTO Mira Murati and two researchers, Mark Chen and Barret Zoph, demonstrated GPT-4o's real-time responsiveness in conversation while using its voice functionality.

The demo began with a conversation about Chen's nerves, with GPT-4o listening and responding to his breathing. The model then told Barret a bedtime story, adding more drama to its voice when asked; the researchers even had it speak like a robot.

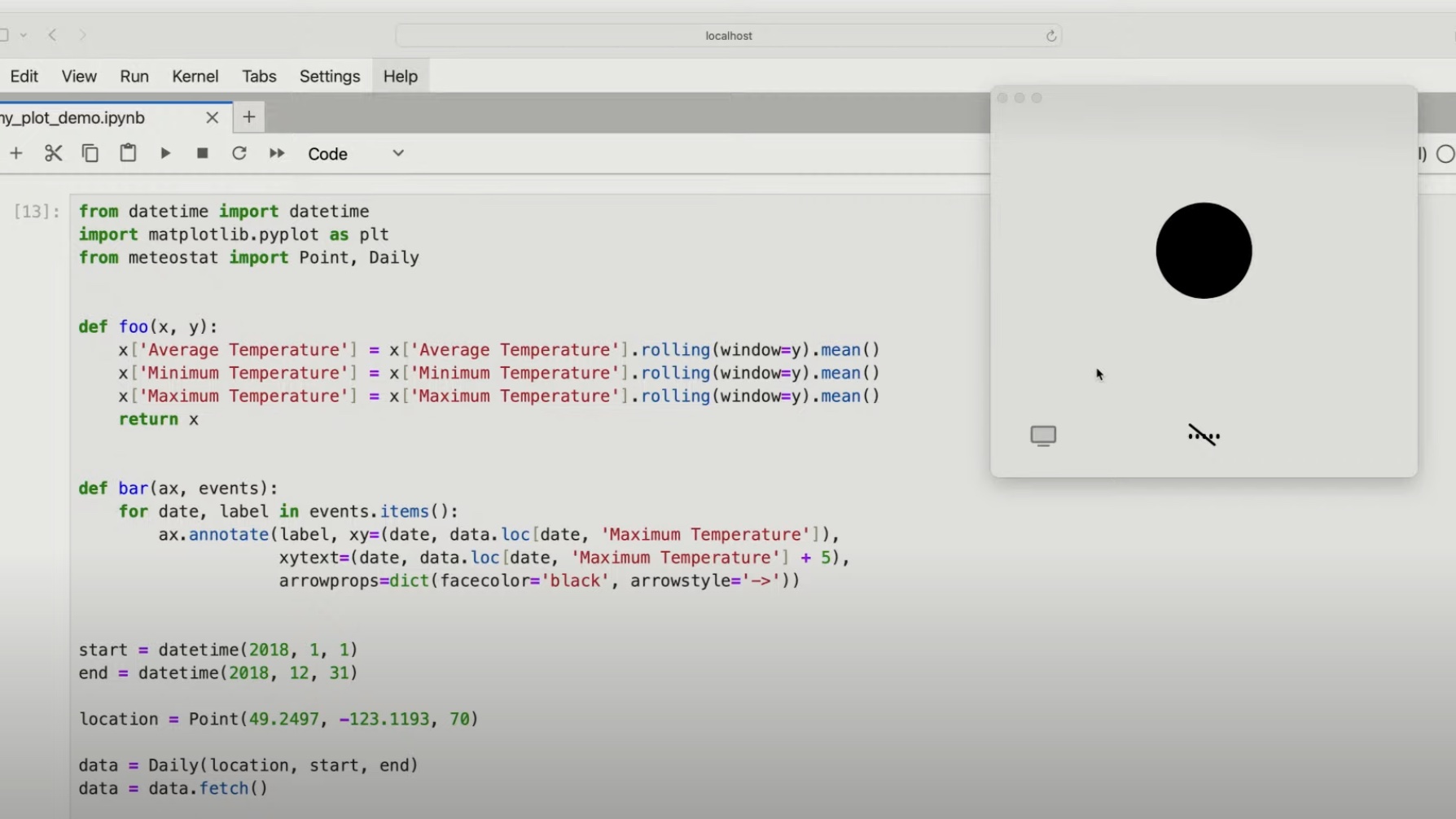

Next, Barret "showed" GPT-4o a math problem, and the model guided him to the solution with suggestions and encouragement. Chen then asked why this particular mathematical concept was useful, and the model answered in detail.

The team then showed GPT-4o some code, which the model explained in plain English, and it gave feedback on the plot that the code generated, talking about notable events, axis labels, and a variety of inputs. This underscored OpenAI's continued focus on improving how GPT models work with codebases and on strengthening their mathematical capabilities.

The penultimate demo was an impressive showcase of GPT-4o's linguistic abilities, as it acted as a live interpreter, translating aloud between English and Italian in real time.

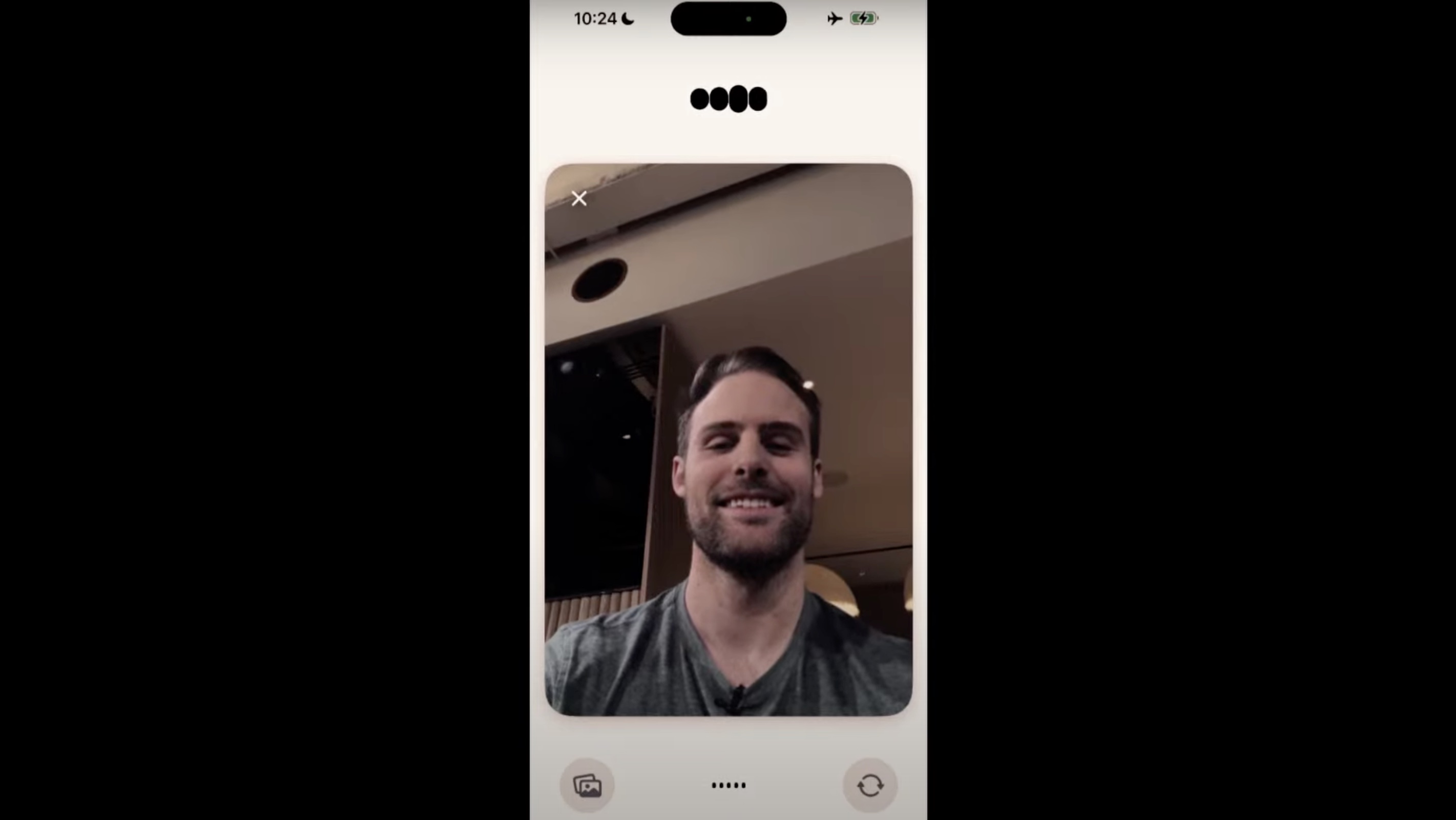

Finally, OpenAI provided a brief demonstration of GPT-4o's ability to identify emotions from a selfie sent by Barret, noting that he seemed happy and cheerful.

If the model works as demonstrated, you will be able to talk to it more naturally than to many generative AI voice models and existing digital assistants. Instead of taking strict turns, you'll be able to interrupt it, and it will keep processing and responding, much as people do when they talk to each other. In addition, the lag between a query and its response, previously two or three seconds, has been drastically reduced.

ChatGPT equipped with GPT-4o will be released in the coming weeks, and you will be able to try it for free. This comes a few weeks after OpenAI made ChatGPT available to try without signing up for an account.

2. Free users will have access to the GPT Store, memory, vision, browsing and advanced data analysis

GPTs are custom chatbots created by OpenAI and ChatGPT Plus users to help enable more specific conversations and tasks. Now, many more users can access them in the GPT Store.

Additionally, free users will be able to use ChatGPT's memory feature, which makes it a more useful tool by giving it a sense of continuity across conversations. Also added to the free plan are ChatGPT's vision capabilities, which let you chat with the bot about uploaded items such as images and documents. The browse feature lets ChatGPT search the web so it can answer with up-to-date information.

ChatGPT's capabilities have improved in quality and speed across 50 languages, supporting OpenAI's goal of bringing its powers to as many people as possible.

3. GPT-4o will be available in the API for developers

The latest OpenAI model will be available for developers to incorporate into their AI applications as a text and vision model. Support for GPT-4o's audio and video capabilities will follow soon, starting with a small group of trusted partners in the API.
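To give a rough idea of what "text and vision model" means for a developer, here is a minimal sketch that builds (but does not send) a request body in the shape of OpenAI's Chat Completions API, pairing a text prompt with an image URL. The helper name `build_gpt4o_request` and the example image URL are hypothetical, and the request format should be checked against OpenAI's current API reference before use.

```python
import json
from typing import Optional


def build_gpt4o_request(prompt: str, image_url: Optional[str] = None) -> dict:
    """Build a Chat Completions request body that targets GPT-4o.

    Hypothetical helper for illustration only. Text goes in a "text"
    content part; an optional image goes in an "image_url" content part,
    which is how the API accepts vision input.
    """
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": "gpt-4o",
        "messages": [{"role": "user", "content": content}],
    }


if __name__ == "__main__":
    body = build_gpt4o_request(
        "What is shown in this chart?",
        "https://example.com/chart.png",  # placeholder URL, not a real image
    )
    # This body would be POSTed to https://api.openai.com/v1/chat/completions
    # with an "Authorization: Bearer <API key>" header; nothing is sent here.
    print(json.dumps(body, indent=2))
```

Audio and video input are not shown, since the announcement says those capabilities will initially be limited to trusted partners.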

4. The new ChatGPT desktop app

OpenAI is launching a desktop app for macOS to further its mission of making its products as easy to use as possible, wherever you are and whichever model you're using, including the new GPT-4o. You can use keyboard shortcuts to get things done even faster.

According to OpenAI, the desktop app is now available for ChatGPT Plus users and will be available to more users in the coming weeks. It also has a similar design to the mobile app's updated interface.

5. An updated ChatGPT UI

ChatGPT is getting a more natural and intuitive user interface, designed to make interacting with the model easier and less jarring. OpenAI wants to reach the point where you barely notice the AI and ChatGPT simply feels friendlier. This means a new home screen, a new message layout, and other changes.

6. OpenAI is not finished yet

The mission is bold: OpenAI seeks to demystify technology while putting some of the most complex technology ever built within reach of as many people as possible. Murati concluded by saying that OpenAI will soon share an update on what it is preparing next, and thanked Nvidia for providing the advanced GPUs that made the demo possible.

OpenAI is determined to shape how we interact with our devices, closely studying how humans interact with each other and trying to apply those insights to its products. The latency involved in processing all the nuances of an interaction is part of what dictates how we behave with products like ChatGPT, and OpenAI has been working hard to reduce it. As Murati put it, the model's capabilities will keep evolving to help you with exactly what you're doing or asking, at exactly the right moment.