That voice you hear, even one you recognize, may not be real, and you may have no way of knowing. Voice synthesis is not a new phenomenon, but a growing number of free applications is putting this powerful voice cloning capability in the hands of ordinary people, and the ramifications could be far-reaching and unstoppable.

A recent Consumer Reports study that analyzed half a dozen of these tools puts the risks in stark relief. Platforms such as ElevenLabs, Speechify, Resemble AI and others use powerful voice synthesis models to analyze and recreate voices, sometimes with few safeguards in place. Some try: Descript, for example, asks for recorded voice consent before the system will recreate a voice signature. But others are not so careful.

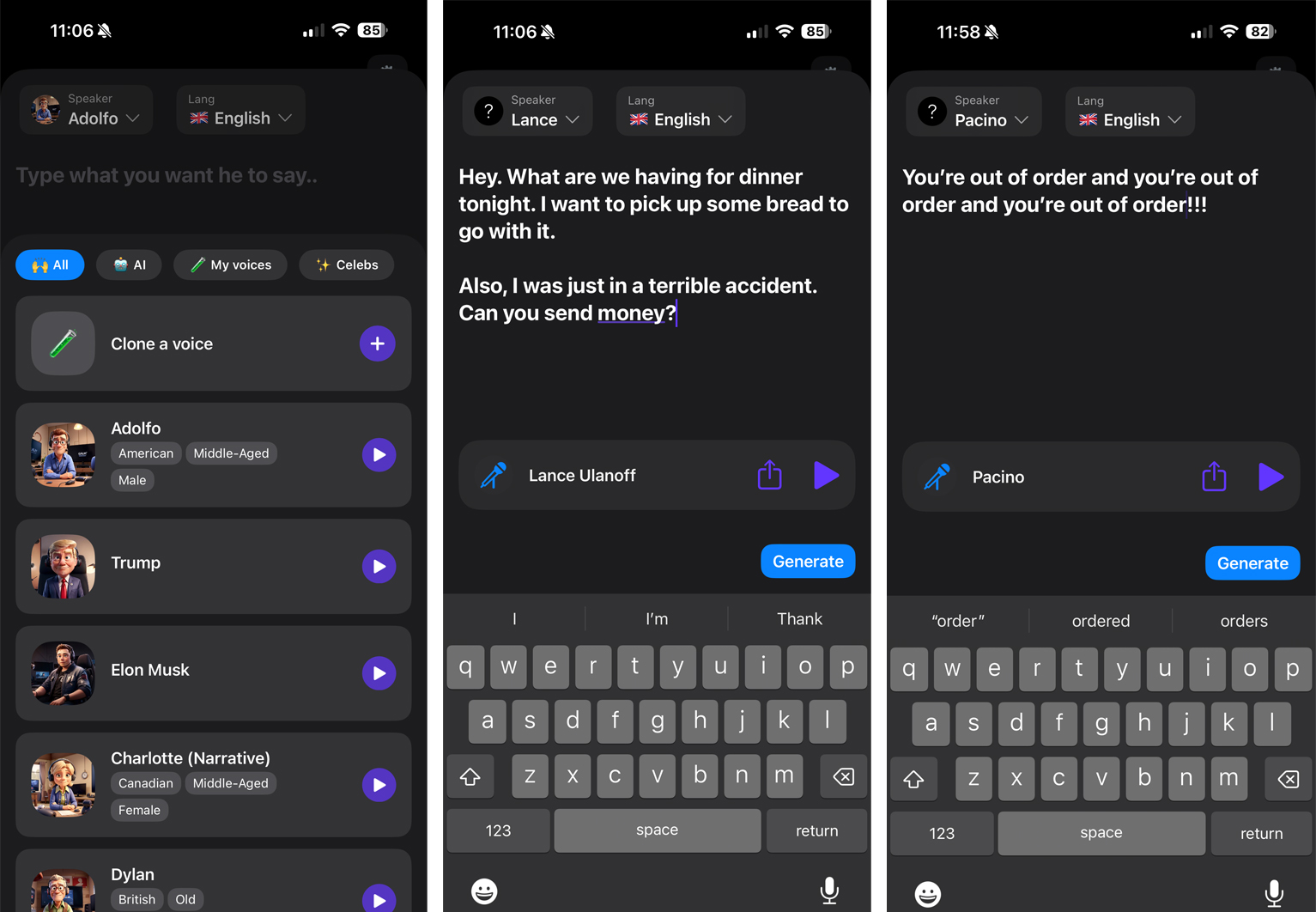

I found an application called Playkit from Play.ht that lets you clone a voice for free for three days and then charges you $5.99 per month. The paywall is, in theory, a barrier against potential misuse, except that I was able to clone a voice without starting the trial.

Say, 'too easy'

The application walks you through setup and then presents some premade voice clones, including those of President Donald Trump and Elon Musk (yes, you can make the president say things such as, "I think that DEI should be supported and expanded throughout the world"). But at the top there is a "Voice clone" option.

All I had to do was select a video from my photo library and upload it. Videos must be at least 30 seconds long (but no longer than a minute) and in English. I could have chosen a video of anyone and, if I had filmed, say, a clip of a George Clooney interview, I could have uploaded that (more on that later).

The system quickly analyzed the audio. The application does not tell you whether this is done locally or in the cloud, but I will assume the latter, since such powerful models rarely run locally on a mobile device (see ChatGPT in Apple Intelligence). I saved my voice clone under my name so I could select it again from the list of cloned voices.

When I want my clone to say something in my voice, I simply type the text and press a large Generate button. That process generally takes 10 to 15 seconds.

The voices generated by Playkit, including mine, are disturbingly accurate. If I have one criticism, it is that the tone and emotion are a bit off. My cloned voice sounds the same whether it is talking about what to pick up for dinner or saying that it has been in a terrible car accident. Even exclamation points do not change the expression.

And yet I could see people being fooled by this. Remember, anyone with access to 30 seconds of video of you speaking could effectively clone your voice and then use it however they wish. Of course, they would have to pay $5.99 a month to keep using it, but someone planning a financial scam might think it is worth it.

Platforms like this one, which do not require explicit permission for voice cloning, will surely proliferate, and my concern is that there are no safeguards or regulations in sight. Services such as Descript, which require audio consent from the person being cloned, are outliers.

Play.ht states that it protects people's voice rights. Here is an excerpt from its ethics page:

Our platform values intellectual property rights and personal property. Users are allowed to clone only their own voices or those for which they have explicit permission. This strict policy is designed to avoid any possible infraction of copyright and maintain a high level of respect and responsibility.

It is a high-minded promise, but the reality is that I recorded 30-second clips from famous Benedict Cumberbatch and Al Pacino movies and, in less than a minute, had usable voice clones of both actors.

What is needed here is comprehensive AI regulation, but that requires agreement and cooperation at the government level, and right now that is not forthcoming. In 2023, then-President Joe Biden signed an executive order on AI that partly sought to offer some regulatory guidance (he followed up with another AI-related order earlier this year). The Trump administration is allergic to government regulation (and to any Biden executive order) and quickly revoked it. The problem is that it has yet to propose anything to replace it. It seems the new plan is to hope that companies will be good digital citizens and at least try to do no harm.

Unfortunately, most of these companies are like arms manufacturers. They are not hurting people directly; no one who makes a voice cloning tool is calling your elderly uncle and convincing him, with your voice clone, that you urgently need him to wire thousands of dollars. But some of the people who use their AI weapons are.

There is no easy solution for what I fear will become a voice cloning crisis, but I suggest that you no longer trust the voices you hear in videos, on the phone or in voice messages. If you have any doubts, check directly with the person in question.

Meanwhile, I hope that more voice platforms insist on recorded and/or documented permission before they let users clone anyone's voice.