- Google expands Gemini AI access to students under 18, but with safeguards

- Includes AI literacy tools, fact-checking, and stricter content moderation

- However, it raises new questions about the long-term role of AI in education systems worldwide

When calculators first entered classrooms, many worried that students' math skills would weaken. The arrival of the internet and smartphones brought similar fears about plagiarism and distraction, and now AI tools are taking their turn at the center of attention.

With the rollout of the Google Gemini app to all Google Workspace for Education users, including students under 18, those old concerns are resurfacing in a new form.

Gemini promises to help with everything from lesson planning to real-time feedback, but its expansion also raises difficult questions about the long-term role of AI in education and how it could reshape learning itself.

Stricter content policies

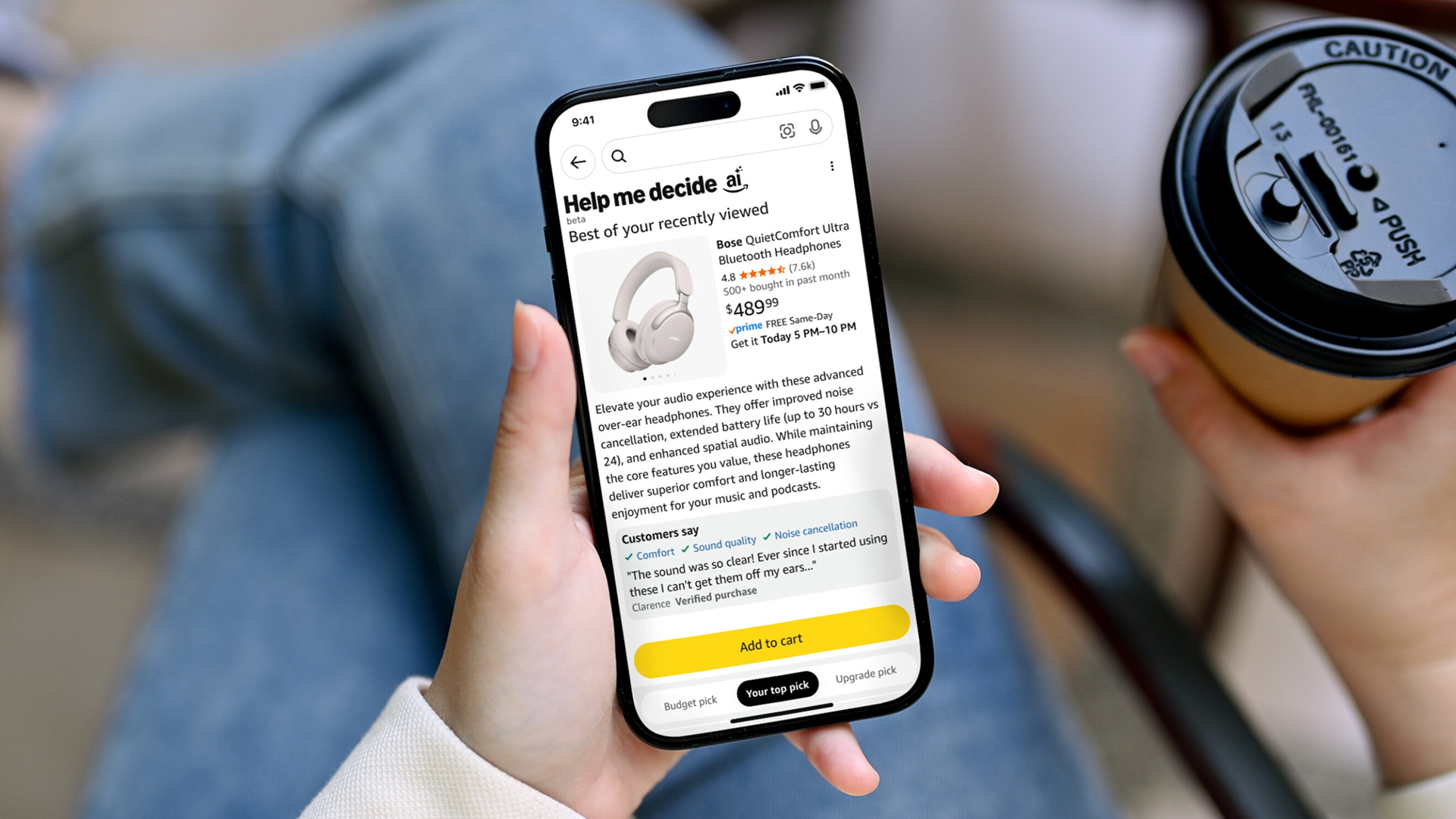

Google says the goal is to support creativity, learning, and responsible AI use. To that end, Gemini includes LearnLM, a family of models fine-tuned for education and developed with input from education experts.

These models are built to handle tasks such as helping students brainstorm, check their understanding, or generate practice materials.

For students under 18, Gemini applies stricter content policies and offers AI literacy resources from groups such as ConnectSafely and the Family Online Safety Institute.

First-time users are guided through onboarding content that explains how to use AI responsibly.

To reduce the risk of misinformation, Gemini includes a fact-checking feature. When a student asks a fact-based question, the tool double-checks its response using Google Search.

This happens automatically the first time, and students can trigger it again later if needed.

Google has emphasized privacy and security in the rollout, saying that Gemini for Education follows the same data protection terms as the rest of Workspace for Education.

In a nutshell, that means student data is not reviewed by humans or used to train AI models.

The app is also aligned with education and privacy regulations and standards such as FERPA, COPPA, HIPAA, and FedRAMP.

That said, some educators and parents remain uncertain about how AI will affect student engagement and thinking, something we have covered before.

Google Gemini can save time and boost productivity, but there are big questions about whether students might rely on it too much, or whether it could change how learning is assessed.