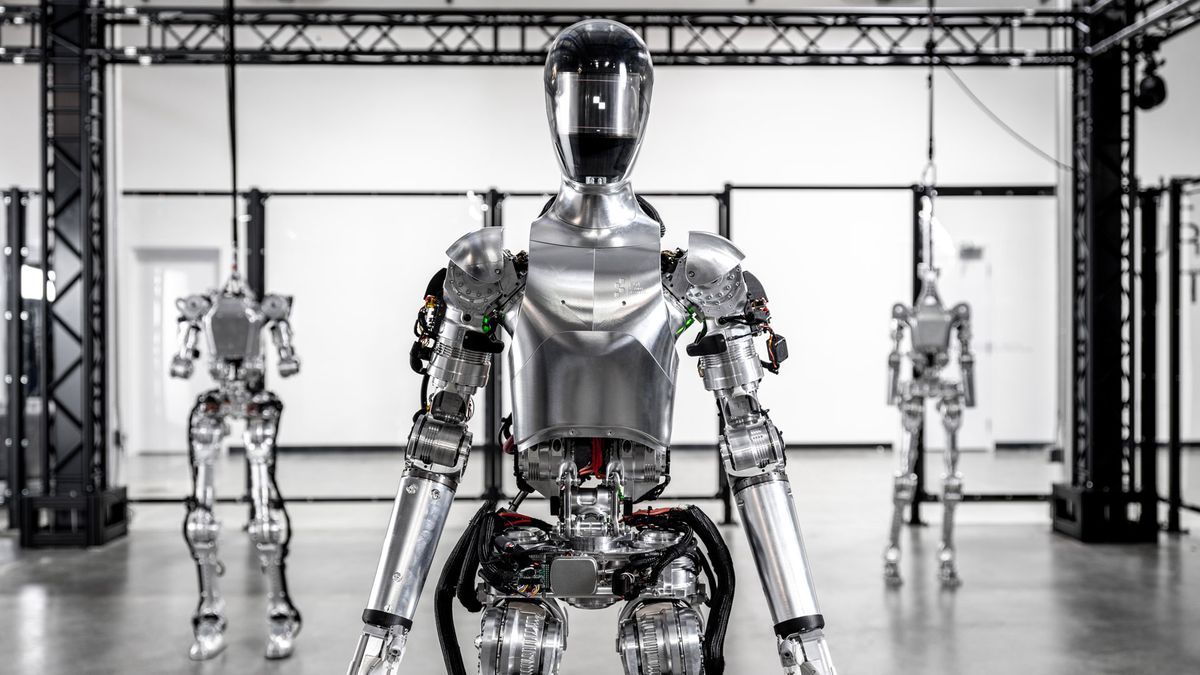

The development of humanoid robotics has moved at a snail's pace for the better part of two decades, but it is now rapidly accelerating thanks to a collaboration between Figure AI and OpenAI, the result of which is the most amazing real humanoid robot video I've seen in my life.

On Wednesday, robotics startup Figure AI released a video update (see below) of its Figure 01 robot running a new visual language model (VLM) that has somehow transformed the robot from an uninteresting automaton into a full-fledged science-fiction robot that is approaching C-3PO-level capabilities.

In the video, Figure 01 is behind a table with a plate, an apple and a cup. To the left is a drainer. A human stands in front of the robot and asks: “Figure 01, what do you see now?”

After a few seconds, Figure 01 responds with a remarkably human voice (there is no face, just an animated light that moves in sync with the voice), detailing everything on the table and describing the man standing in front of it.

“That's great,” I thought.

Then the man asks, “Hey, can I eat something?”

Figure 01 responds, “Sure,” and then, with one deft, fluid movement, takes the apple and hands it to the man.

“Wow,” I thought.

The man then empties some crumpled trash from a bin in front of Figure 01 while asking, “Can you explain why you did what you just did while you pick up this trash?”

Figure 01 wastes no time explaining its reasoning as it places the paper back in the bin. “So I gave you the apple because it is the only edible item I can give you from the table.”

I thought, “This can't be real.”

It is, though, at least according to Figure AI.

Speech-to-speech

The company explained in a statement that Figure 01 engages in “speech-to-speech” reasoning: it uses a pre-trained multimodal model from OpenAI, a VLM, to understand images and text, and it relies on the entire voice conversation to craft its responses. This is different from, say, OpenAI's GPT-4, which focuses on written prompts.

It also uses what the company calls “learned low-level bimanual manipulation.” The system pairs precise image calibrations (down to the pixel level) with its neural network to control movement. “These networks take in onboard images at 10 Hz and generate 24-degree-of-freedom actions (wrist poses and finger joint angles) at 200 Hz,” Figure AI wrote in a statement.
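To put those numbers in perspective, here is a minimal Python sketch of the arithmetic behind a two-rate loop. It is an illustration, not Figure AI's implementation: the statement gives only the two rates and the 24 degrees of freedom, so the assumption that each image is reused for several action outputs, and every name below (including `hypothetical_policy`), is made up for the example.

```python
from fractions import Fraction

# Figures quoted in Figure AI's statement.
IMAGE_RATE_HZ = 10     # images consumed per second
ACTION_RATE_HZ = 200   # action vectors produced per second
DEGREES_OF_FREEDOM = 24


def actions_per_image(image_hz: int, action_hz: int) -> int:
    """Return how many actions are emitted for each incoming image."""
    ratio = Fraction(action_hz, image_hz)
    if ratio.denominator != 1:
        raise ValueError("action rate must be a whole multiple of image rate")
    return ratio.numerator


def hypothetical_policy(frame_index: int, step: int) -> list[float]:
    """Placeholder for a learned network (assumption, not a real API).

    Returns one 24-value action vector; zeros stand in for wrist poses
    and finger joint angles.
    """
    return [0.0] * DEGREES_OF_FREEDOM


def run(seconds: int) -> list[list[float]]:
    """Simulate the loop: each image is followed by a burst of actions."""
    per_image = actions_per_image(IMAGE_RATE_HZ, ACTION_RATE_HZ)
    actions = []
    for frame_index in range(seconds * IMAGE_RATE_HZ):
        # Assumption: the same image informs every action until the next one.
        for step in range(per_image):
            actions.append(hypothetical_policy(frame_index, step))
    return actions


if __name__ == "__main__":
    per_image = actions_per_image(IMAGE_RATE_HZ, ACTION_RATE_HZ)
    print(f"{per_image} actions per image")
    print(f"{1000 / ACTION_RATE_HZ} ms between actions")
    print(f"{len(run(1))} action vectors in one second")
```

Under these assumptions, the robot would produce 20 action vectors for every image it takes in, or one new set of 24 joint targets every 5 milliseconds.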

The company claims that every behavior in the video is based on system learning and is not teleoperated, meaning there is no one behind the scenes manipulating Figure 01.

Without seeing Figure 01 in person and asking my own questions, it is difficult to verify these claims. There is a chance that this is not the first time Figure 01 has performed this routine. It could have been the hundredth time, which could explain its speed and fluidity.

Or maybe this is 100% real, and in that case, wow. Just wow.