Google AI Overviews is a Google Search feature that displays AI-generated summaries at the top of search results. It is powered by a customized version of Google's Gemini model and is designed to help users quickly find detailed, contextually relevant information drawn from a variety of online sources.

The following guide explains what Google AI Overviews is, how it works, and how it relates to Google's Search Generative Experience (SGE) initiative.

Understanding Google AI Overviews

Google AI Overviews was previously part of SGE, a Google Search feature that let users apply Google's generative AI technology to text- and image-based searches. SGE has since evolved into a broader effort that extends Google's generative AI capabilities to more parts of the search experience.

Google AI Overviews relies on a custom version of Google's Gemini large language model to provide near-instant answers to users' search queries (hence “overviews”). Because Gemini is integrated with Google's core web ranking systems, AI Overviews can search for and extract relevant information from Google's own index.

The system is designed to help users find the information they need quickly, without having to sift through multiple sources. For example, if you're looking for information about climate change, AI Overviews can provide a summary with key facts and statistics, along with links for further reading. In this way, AI Overviews does the legwork for users and reduces the effort they must put into searching for information, or so the theory goes.

Key Features of Google AI Overviews

Multi-step reasoning

AI Overviews can handle complex, multi-part queries by linking related pieces of information, which lets it provide more nuanced answers. For example, you can search for “Show me the best gyms within a 30-minute drive with no sign-up fees,” and AI Overviews will show nearby gyms, their distance from you, and any relevant sign-up offers (Figure A).

Likewise, if you ask “What are some good options for a day in Dallas with the kids? Recommend some ice cream shops near each option,” AI Overviews may respond with a list of family-friendly ideas, each accompanied by nearby ice cream shops and a map showing their locations.

Aids for planning and brainstorming

Beyond answering questions, AI Overviews can help users plan activities or gather ideas for projects by pulling together relevant information and resources. For example, you can search for “Create a five-day protein-rich meal plan that's easy to prepare,” and AI Overviews will aggregate recipes from around the web to give you a starting point. From there, you can refine the response, for instance by asking for vegetarian alternatives, and add the necessary ingredients to your shopping list.

Starting this summer, users will also be able to use AI Overviews to plan trips. Sissie Hsiao, Google vice president and general manager of Gemini Experiences, demonstrated this capability during Google I/O.

During the demo, Hsiao gave an example prompt: “My family and I are going to Miami for Labor Day. My son loves art and my husband likes lots of fresh seafood. Can you get my flight and hotel information from Gmail and help me plan the weekend?” Gemini then gathers information from Maps, Search, and Gmail to produce a personalized itinerary that takes into account details such as flight times and the distance from the hotel to nearby dining spots.

Later this year, Google plans to add more personalization capabilities, including richer recommendations for less specific queries. For example, a search for “dinner spots in Dallas for an anniversary celebration” could surface places with a more romantic atmosphere or, weather permitting, rooftop dining.

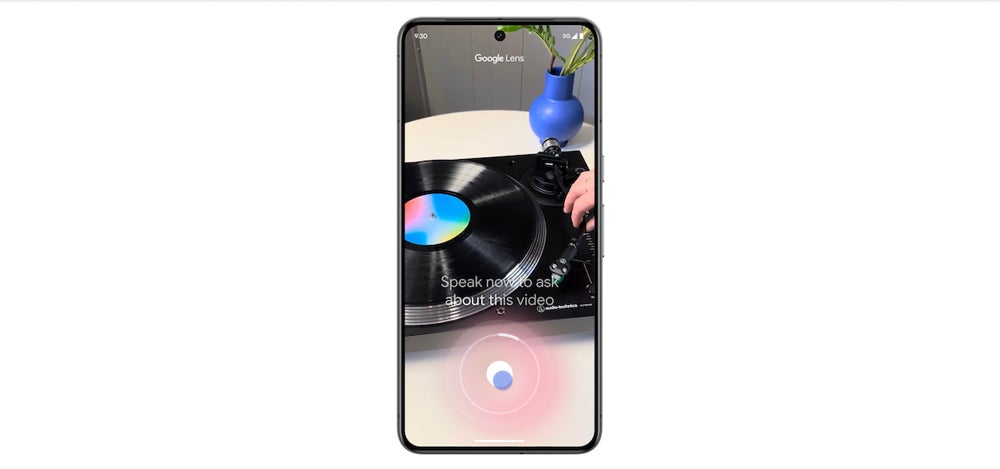

Video-based search

In the future, AI Overviews will be able to understand and respond to queries submitted as video. This means users will be able to record a video of something, ask a question about it, and get help from AI Overviews. Google says this will make it easier to find answers to problems without having to type detailed text descriptions (Figure B).

AI Overviews vs. Google SGE

Google introduced generative AI to its search platform on October 12, 2023, as part of SGE, a Google Search Labs experiment that initially let users generate AI-powered images and text directly from the search bar. Its goal was to provide more creative answers to questions that traditional search results might not fully address.

SGE has since evolved into AI Overviews, which began rolling out to US users on May 14, coinciding with the Google I/O 2024 conference. The feature will expand to users in more countries throughout the rest of the year, and Google aims to make AI Overviews available to more than one billion people by the end of 2024.

How to access, customize, or disable AI Overviews

To access AI Overviews, simply run a regular Google search. If an AI-generated overview is relevant to your query, it will appear at the top of the search results, much like a knowledge panel.

There is currently no built-in option to disable AI Overviews completely. A Google support page notes: “AI overviews are part of Google Search like other features, such as knowledge panels, and cannot be turned off.” Your best bet, then, is to ignore them and focus on the traditional search results. If you prefer the usual list of links, you can switch to the Web tab at the top of the Google Search results page. Alternatively, you can install a third-party browser extension that hides AI Overviews, though we advise caution if you go this route.

As for customization, Google plans to introduce options that let users adjust the complexity of the language used in AI Overviews or get a more detailed breakdown of the results. Users will be able to enter a query and then choose between the original response, a simplified version, or a more detailed breakdown. This should make AI Overviews useful to a wider range of users, from beginners to experts.

AI Overviews challenges and user response

The launch of Google AI Overviews has not been smooth. In late May, Google came under pressure to review the feature after it returned some questionable results. Google attributed the problems to its AI model misinterpreting queries or nuances of language, as well as to gaps in the quality information available on certain topics, such as a query asking how many rocks a person should eat each day. (Note: TechRepublic strongly advises against eating rocks.)

In response, Liz Reid, Google's head of Search, said the company would “continue to improve when and how we display AI overviews and strengthen our protections, even for edge cases.” The improvements include refining AI Overviews to better handle satirical content and nonsensical queries, and adding restrictions that keep AI Overviews from appearing in situations where they are unlikely to provide useful information.