Nvidia continues to ride the AI wave as it sees unprecedented demand for its next-generation Blackwell GPUs. Supply for the next 12 months is sold out, Nvidia CEO Jensen Huang told Morgan Stanley analysts during an investor meeting.

A similar situation occurred with Hopper GPUs several quarters ago, said Morgan Stanley analyst Joe Moore.

Nvidia's traditional customers, including major tech companies such as AWS, Google, Meta, Microsoft, Oracle, and CoreWeave, are driving the overwhelming demand for Blackwell GPUs. These companies have already purchased all the Blackwell GPUs that Nvidia and its manufacturing partner TSMC can produce over the next four quarters.

The exceptionally high demand appears to secure the continued growth of Nvidia's already formidable footprint in the AI processor market, even with competition from rivals such as AMD, Intel, and several smaller cloud service providers.

“Our view remains that Nvidia is likely to gain share in AI processors in 2025, as the largest users of custom silicon will see very steep ramps with Nvidia solutions next year,” Moore said in a client note, according to TechSpot. “Everything we heard this week reinforced that.”

The news comes months after Gartner predicted that AI chip revenue will skyrocket in 2024.

Designed for large-scale AI deployments

Nvidia unveiled the Blackwell GPU platform in March, praising its ability to “unlock advances in data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing and generative artificial intelligence – all emerging opportunities for Nvidia.”

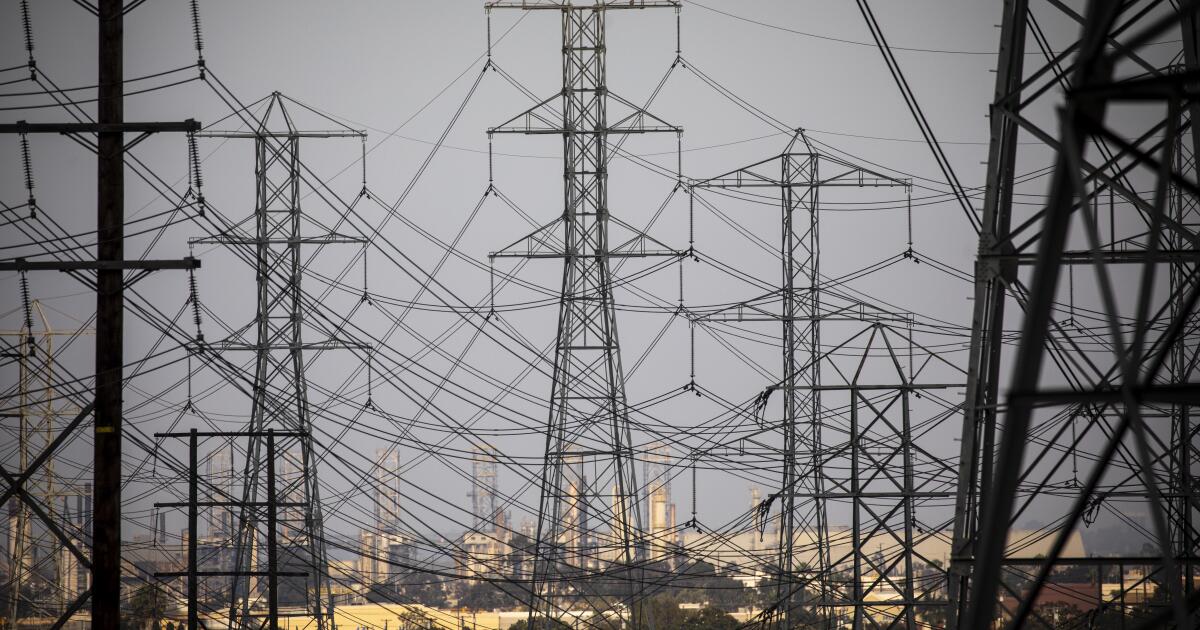

The Blackwell platform includes the B200 Tensor Core GPU and the GB200 Grace “super chip.” These processors are designed to handle the demanding workloads of large language model (LLM) inference while significantly reducing power consumption, a growing concern in the industry. At the time of its release, Nvidia said the Blackwell architecture adds chip-level capabilities that use AI-based preventative maintenance to run diagnostics and forecast reliability issues.

“This maximizes system uptime and improves resiliency so that large-scale AI deployments can run uninterrupted for weeks or even months at a time and reduce operational costs,” the company said in March.

Memory supply remains a question

Nvidia resolved the packaging issues it initially faced with the B100 and B200 GPUs, allowing the company and TSMC to ramp up production. Both the B100 and B200 use TSMC's CoWoS-L packaging, and questions remain about whether the world's largest contract chipmaker has enough CoWoS-L capacity.

It also remains to be seen if memory manufacturers can supply enough HBM3E memory for cutting-edge GPUs like Blackwell, as demand for AI-enabled GPUs is skyrocketing. Notably, Nvidia has not yet qualified Samsung's HBM3E memory for its Blackwell GPUs, another factor influencing supply.

Nvidia acknowledged in August that its Blackwell-based products were experiencing low yields and that it would need to re-spin some layers of the B200 processor to improve production yield. Despite these challenges, Nvidia appeared confident in its ability to ramp up Blackwell production in the fourth quarter of 2024. It expects to ship several billion dollars' worth of Blackwell GPUs in the final quarter of this year.

The Blackwell architecture may be the most complex architecture ever built for AI. It goes beyond the demands of current models and lays the groundwork, in infrastructure, engineering, and platform, that organizations will need to handle the parameter counts and performance requirements of future LLMs.

Nvidia is not only working on compute processing to meet the demands of these new models, but is also focusing on the three biggest barriers limiting AI today: power consumption, latency, and accuracy. According to the company, the Blackwell architecture is designed to deliver unprecedented performance with improved energy efficiency.

Nvidia reported second-quarter data center revenue of $26.3 billion, up 154 percent from the same quarter a year earlier.