Microsoft Security Copilot, also known as Copilot for Security, will be generally available starting April 1, the company announced today. Microsoft revealed that pricing for Security Copilot will start at $4 per hour, based on usage.

At a press conference held on March 7 at the Microsoft Experience Center in New York (Figure A), we looked at how Microsoft positions Security Copilot as a way for security staff to get real-time assistance with their work and pull data from across Microsoft's entire suite of security services.

Microsoft Security Copilot availability and pricing

Security Copilot was first announced in March 2023, and early access opened in October 2023. General availability will be worldwide, and the Security Copilot user interface will be available in 25 languages. Security Copilot can process prompts and respond in eight languages.

Security Copilot will be sold through a consumption-based pricing model, in which customers pay based on their needs. Usage is measured in security compute units (SCUs). Customers will be billed monthly for the number of SCUs provisioned per hour at a rate of $4 per SCU per hour, with a minimum of one hour of use. Microsoft frames this as a way to let users start experimenting with Security Copilot and then expand as needed.
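To make the billing arithmetic concrete, here is a minimal sketch of a monthly cost estimate. It assumes the bill is simply the number of provisioned SCUs multiplied by the hours provisioned and the $4 hourly rate, and it reads the one-hour minimum as a floor on billable hours. The function name and parameters are hypothetical and are not part of any Microsoft API; actual invoices may be calculated differently.

```python
# Rough estimate only: assumes cost = SCUs x billable hours x hourly rate.
SCU_RATE_USD_PER_HOUR = 4.0   # rate quoted in the announcement
MIN_BILLABLE_HOURS = 1.0      # assumption: the one-hour minimum is a floor on hours


def estimate_monthly_cost(scus: int, hours: float,
                          rate: float = SCU_RATE_USD_PER_HOUR) -> float:
    """Return an estimated charge in USD for `scus` units provisioned for `hours` hours."""
    if scus < 1:
        raise ValueError("at least one SCU must be provisioned")
    if hours < 0:
        raise ValueError("hours cannot be negative")
    billable_hours = max(hours, MIN_BILLABLE_HOURS)
    return scus * billable_hours * rate


if __name__ == "__main__":
    # One SCU left provisioned for a 730-hour month.
    print(f"${estimate_monthly_cost(1, 730):.2f}")  # prints $2920.00
```

Under these assumptions, a single SCU kept provisioned around the clock costs roughly $2,920 per month, which is why short experiments with fewer provisioned hours are a cheaper starting point.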

How Microsoft Security Copilot helps security professionals

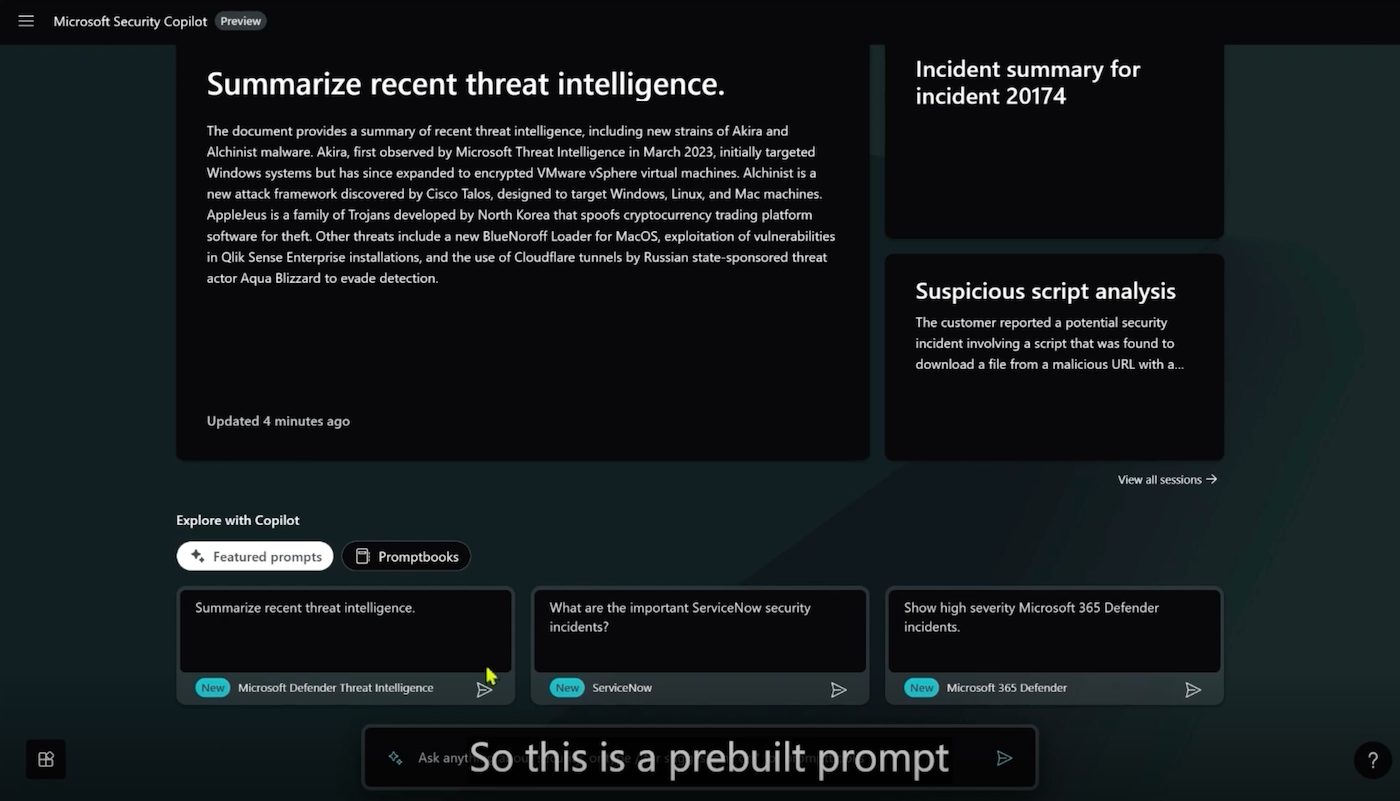

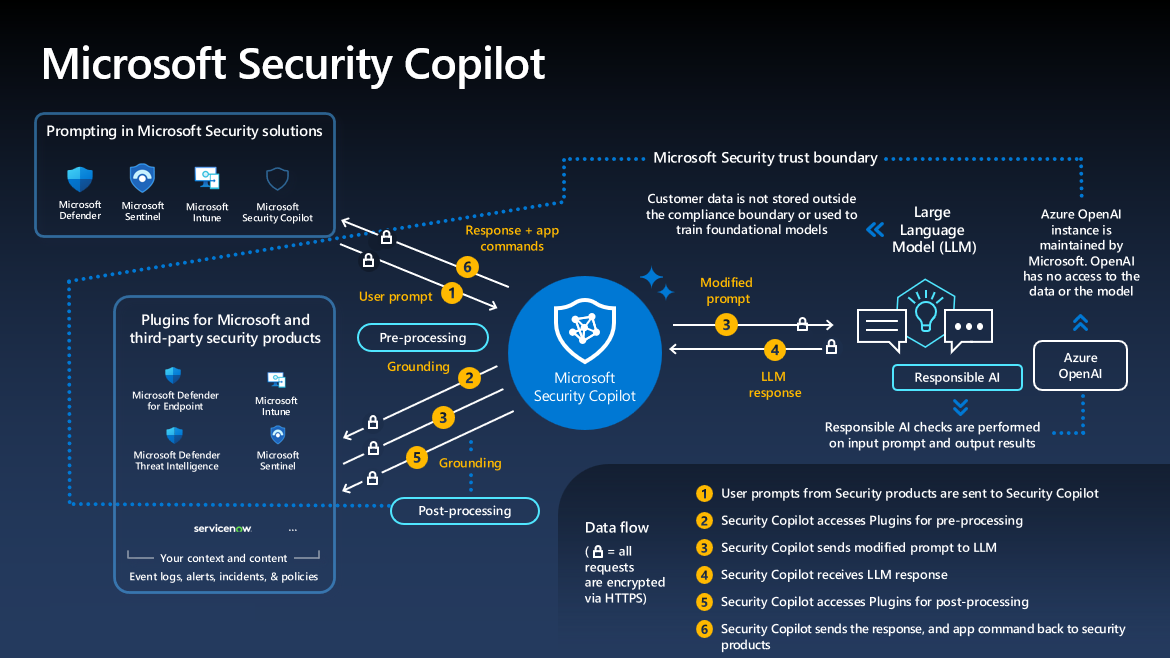

Security Copilot can function as a standalone application that pulls data from many different sources (Figure B) or as a chat window integrated within other Microsoft security services.

Security Copilot offers suggestions for what a security analyst might do next based on a conversation or incident report.

Putting AI in the hands of cybersecurity professionals helps defend against attackers operating in a “ransomware-like-gig-economy” community, said Vasu Jakkal, corporate vice president of security at Microsoft.

What sets Microsoft Security Copilot apart from its competitors, Jakkal said, is that it is based on ChatGPT and can use data from a large number of connected Microsoft applications.

“We process 78 trillion signals, which is our new number (compared to previous data), so all of these signals continue, which we call safety grounding. And without these signals, you really can't have a (generative) AI tool, because you need to know these connections, you need to know the path,” Jakkal said.

Jakkal noted that Microsoft is investing $20 billion in security over five years, in addition to separate investments in artificial intelligence.

SEE: NIST updated its Cybersecurity Framework in February, adding a new area of focus: governance. (TechRepublic)

One benefit of Security Copilot's conversational skills is that it can write incident reports very quickly and make those reports more or less technical depending on the employee they are intended for, Microsoft representatives said.

“For me, Copilot for Security is an absolute game-changer for an executive because it allows them a summary (of security incidents). As big a summary as you want,” said Sherrod DeGrippo, director of threat intelligence strategy at Microsoft.

Security Copilot's ability to customize reports helps CISOs bridge the technical and executive worlds, DeGrippo said.

“My view is that CISOs are a different class of executive people,” DeGrippo said. “They want depth. They want to get technical. They want to have their hands there. And they also want to have the ability to move through those executive circles as experts. They want to be their own experts when they talk to the board, when they talk to their CEO, whatever it is, their CFO.”

Learnings from Security Copilot Private Preview and Early Access

Naadia Sayed, senior product manager for Copilot for Security, said that during the private preview and early access periods, partners told Microsoft which APIs they wanted to connect to Security Copilot. Customers with custom APIs found it especially useful that Security Copilot could connect to those APIs. During the preview periods, partners were able to tailor Security Copilot to their organization's specific workflows, prompts, and scenarios.

The private preview began with customers using the generative AI assistant for security operations tasks, Jakkal told TechRepublic. From there, customers requested that Copilot be integrated with other skills, such as identity-related tasks.

“We are also seeing, on the other hand, that they want to use our security tools for AI management,” Jakkal said.

For example, customers wanted to be sure that a tool such as ChatGPT did not share non-public company information, such as salaries (Figure C).

“One thing we're finding is that people have an increasing appetite for threat intelligence to help direct the use of their resources,” DeGrippo said. “We're seeing customers make resource decisions, such as: understanding threat priority allows them to say we need to devote more people, attention and time to these particular areas. And achieving that level of resource utilization, prioritization and efficiency has made customers really happy. That’s why we’re looking to make sure they have those tools.”