Amazon was one of the tech giants that embraced a set of White House recommendations on the use of generative AI last year. The privacy considerations addressed in those recommendations continue to roll out, with the latest arriving in announcements at the AWS Summit in New York on July 9. In particular, contextual grounding for Guardrails for Amazon Bedrock provides customizable content filters for organizations deploying their own generative AI.

Diya Wynn, responsible AI lead at AWS, spoke to TechRepublic in a virtual briefing earlier today about the new announcements and how companies are balancing the broad knowledge of generative AI with privacy and inclusion.

AWS NY Summit Announcements: Changes to Guardrails for Amazon Bedrock

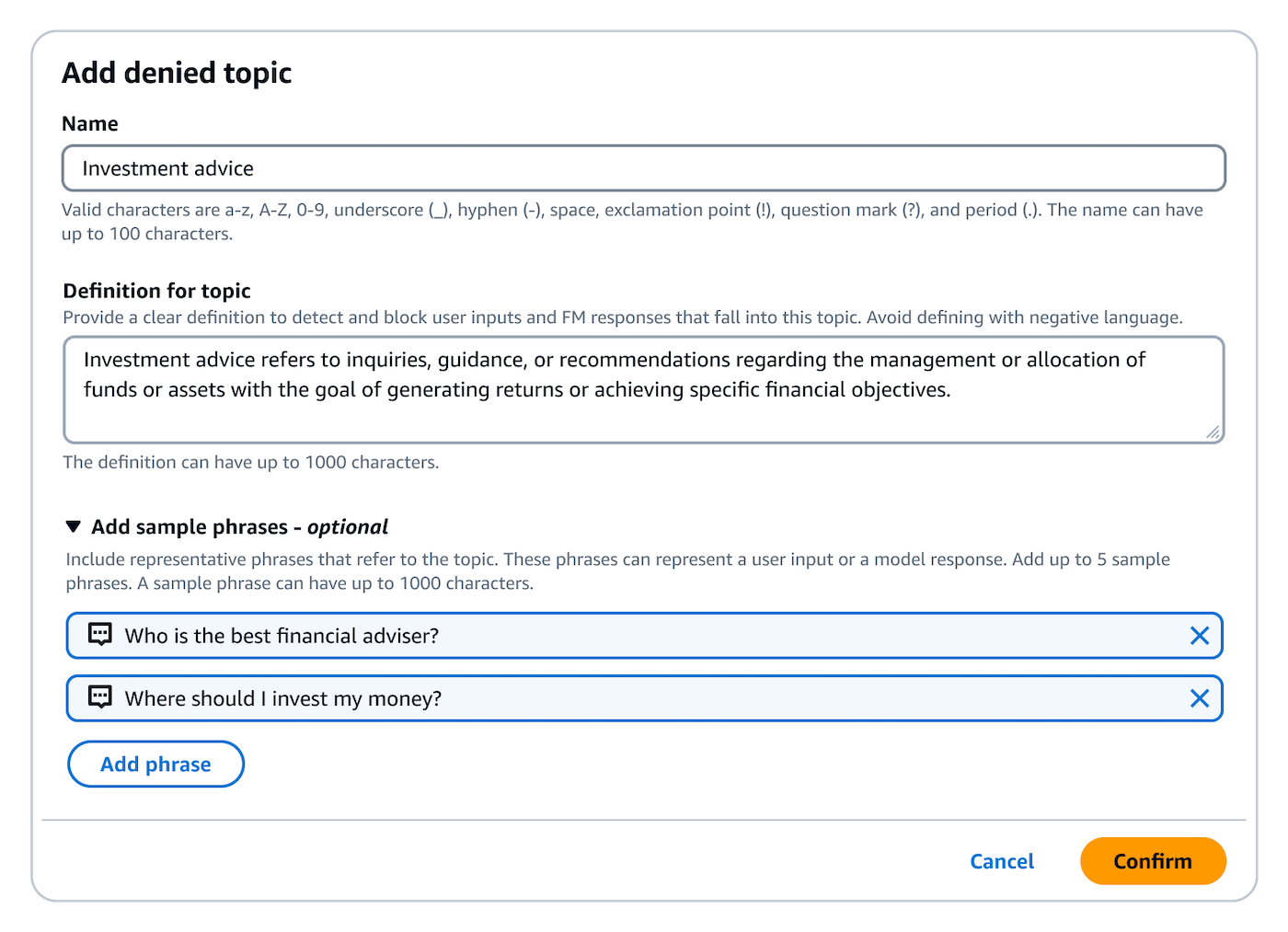

Guardrails for Amazon Bedrock, the security filter for generative AI applications hosted on AWS, has new improvements:

- Starting July 10, users of Anthropic's Claude 3 Haiku can fine-tune the model in Bedrock, in preview.

- Guardrails for Amazon Bedrock now includes contextual grounding checks, which detect hallucinations in model responses for retrieval-augmented generation (RAG) and summarization applications with increased recall.
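Conceptually, a contextual grounding check scores a model response twice. One score measures grounding: is the response supported by the reference source? The other measures relevance: does it answer the user's query? The response is blocked when either score falls below a threshold the app builder configures. The sketch below models only that decision rule in plain Python. It assumes the scores have already been produced by the service. `GroundingPolicy` and `contextual_grounding_decision` are hypothetical names, and the 0 to 0.99 threshold range is an assumption, not something stated in the announcements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroundingPolicy:
    """Hypothetical local stand-in for a guardrail's contextual grounding settings."""

    grounding_threshold: float  # minimum score for "supported by the source"
    relevance_threshold: float  # minimum score for "relevant to the user's query"

    def __post_init__(self) -> None:
        # Assumption: thresholds are limited to the range 0 to 0.99.
        for name in ("grounding_threshold", "relevance_threshold"):
            value = getattr(self, name)
            if not 0.0 <= value <= 0.99:
                raise ValueError(f"{name} must be between 0 and 0.99, got {value}")


def contextual_grounding_decision(
    policy: GroundingPolicy, grounding_score: float, relevance_score: float
) -> tuple[bool, list[str]]:
    """Return (blocked, failed_checks); a check fails when its score is below its threshold.

    The scores themselves would come from the service; this only models the comparison.
    """
    failed = []
    if grounding_score < policy.grounding_threshold:
        failed.append("GROUNDING")
    if relevance_score < policy.relevance_threshold:
        failed.append("RELEVANCE")
    return bool(failed), failed
```

Raising a threshold makes the check stricter. More responses get blocked, which trades some false positives for fewer unsupported answers reaching users.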

Additionally, Guardrails is now available through the standalone ApplyGuardrail API. Enterprises and AWS customers can use it to apply safeguards to generative AI applications even when the underlying models are hosted outside of AWS infrastructure. That means app creators can apply toxicity filters and content filters, and can flag sensitive information they’d like to exclude from the app. Wynn said custom Guardrails can reduce harmful content by up to 85%.
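For a model hosted outside AWS, the standalone API can be called from an AWS SDK. The following is a minimal sketch under several assumptions: it uses boto3's `bedrock-runtime` client and its `apply_guardrail` operation, it requires an existing guardrail with contextual grounding enabled, and it needs valid AWS credentials. The helper function names are hypothetical. The sketch labels the reference document, the user's question, and the external model's answer with the `grounding_source`, `query`, and `guard_content` qualifiers, then reports whether the guardrail intervened and which grounding checks blocked the response.

```python
from typing import Any


def build_apply_guardrail_request(
    guardrail_id: str,
    guardrail_version: str,
    source_text: str,
    query: str,
    model_output: str,
) -> dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime apply_guardrail call."""
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
        # "OUTPUT" because we are screening a model's answer, not a user's prompt.
        "source": "OUTPUT",
        "content": [
            # Reference material the answer should be grounded in.
            {"text": {"text": source_text, "qualifiers": ["grounding_source"]}},
            # The user's question, used for the relevance check.
            {"text": {"text": query, "qualifiers": ["query"]}},
            # The externally hosted model's answer that the guardrail evaluates.
            {"text": {"text": model_output, "qualifiers": ["guard_content"]}},
        ],
    }


def summarize_guardrail_response(response: dict[str, Any]) -> dict[str, Any]:
    """Reduce an apply_guardrail response to the fields an app typically acts on."""
    blocked_filters = []
    for assessment in response.get("assessments", []):
        grounding = assessment.get("contextualGroundingPolicy", {})
        for check in grounding.get("filters", []):
            if check.get("action") == "BLOCKED":
                blocked_filters.append(check.get("type"))
    return {
        "intervened": response.get("action") == "GUARDRAIL_INTERVENED",
        "blocked_filters": blocked_filters,
        # Text returned by the guardrail (for example, its blocked message).
        "outputs": [out.get("text", "") for out in response.get("outputs", [])],
    }


def screen_external_model_output(
    client: Any,
    guardrail_id: str,
    guardrail_version: str,
    source_text: str,
    query: str,
    model_output: str,
) -> dict[str, Any]:
    """Send one external model response through a guardrail via the given client."""
    request = build_apply_guardrail_request(
        guardrail_id, guardrail_version, source_text, query, model_output
    )
    return summarize_guardrail_response(client.apply_guardrail(**request))


def make_runtime_client(region: str) -> Any:
    """Create a real Bedrock runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without the AWS SDK

    return boto3.client("bedrock-runtime", region_name=region)
```

The client is taken as a parameter to keep the AWS call at the edge. That way, the request-building and response-parsing logic can be exercised with a stub before it is pointed at a real guardrail ID, version, and Region.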

Contextual Grounding and the ApplyGuardrail API will be available on July 10 in select AWS Regions.

Contextual grounding for Guardrails for Amazon Bedrock is part of AWS's broader responsible AI strategy

Contextual grounding ties into AWS’s overall responsible AI strategy, which includes an ongoing effort to “advance the science, as well as continue to innovate and provide our customers with services that they can leverage to develop their AI services and products,” Wynn said.

“One of the issues we often hear as a concern or consideration from clients is hallucinations,” she said.

Contextual grounding (and Guardrails in general) can help mitigate that problem. Guardrails with contextual grounding can reduce up to 75% of the hallucinations previously observed in generative AI, Wynn said.

The way customers view generative AI has changed as it has become more common over the past year.

“When we first started out with some of our customer-facing work, customers weren’t necessarily coming to us, right?” Wynn said. “We were, you know, looking at specific use cases and helping support development, but the change over the last year and more has ultimately been that there’s a greater awareness [of generative AI]. And that’s why companies are asking and wanting to understand more about the ways we build and the things they can do to ensure their systems are secure.”

That means “addressing issues of bias” as well as reducing safety concerns or AI hallucinations, she said.

Amazon Q Enterprise Assistant Updates and Other AWS NY Summit Announcements

AWS announced a number of new capabilities and product enhancements at AWS Summit NY. Highlights include:

- A customization capability for developers in the Amazon Q enterprise AI assistant that provides secure access to an organization's codebase.

- The addition of Amazon Q to SageMaker Studio.

- General availability of Amazon Q Apps, a tool for deploying generative AI-powered apps based on an organization's enterprise data.

- Access to Scale AI on Amazon Bedrock to customize, configure, and tune AI models.

- Vector search for Amazon MemoryDB, which speeds up vector searches in vector databases on AWS.

SEE: Amazon recently announced cloud instances powered by Graviton4, which can support AWS's Trainium and Inferentia AI chips.

AWS hits cloud computing training goal ahead of schedule

At its Summit NY, AWS announced that it has already surpassed its goal of training 29 million people worldwide in cloud computing skills by 2025. Across 200 countries and territories, 31 million people have taken AWS cloud-related training courses.

AI training and roles

AWS’s training offerings are numerous, so we won’t list them all here, but free cloud computing training has been held around the world, both in person and online. That includes generative AI training through the AI Ready initiative. Wynn highlighted two new AI-era roles that people can train for: prompt engineer and AI engineer.

“There won’t necessarily be data scientists involved,” Wynn said. “They’re not training foundation models. There will maybe be something like an AI engineer.” The AI engineer will fine-tune the foundation model and integrate it into an application.

“I think the role of the AI engineer is something that we’re seeing increasing in visibility or popularity,” Wynn said. “I think the other is that there are now people who are responsible for prompt engineering. That’s a new role or skill area that’s needed because it’s not as simple as people might think, right? Giving your input or prompt the right kind of context and detail to get some of the specifics that you might want from a large language model.”

TechRepublic covered the AWS NY Summit remotely.